App Details

Description

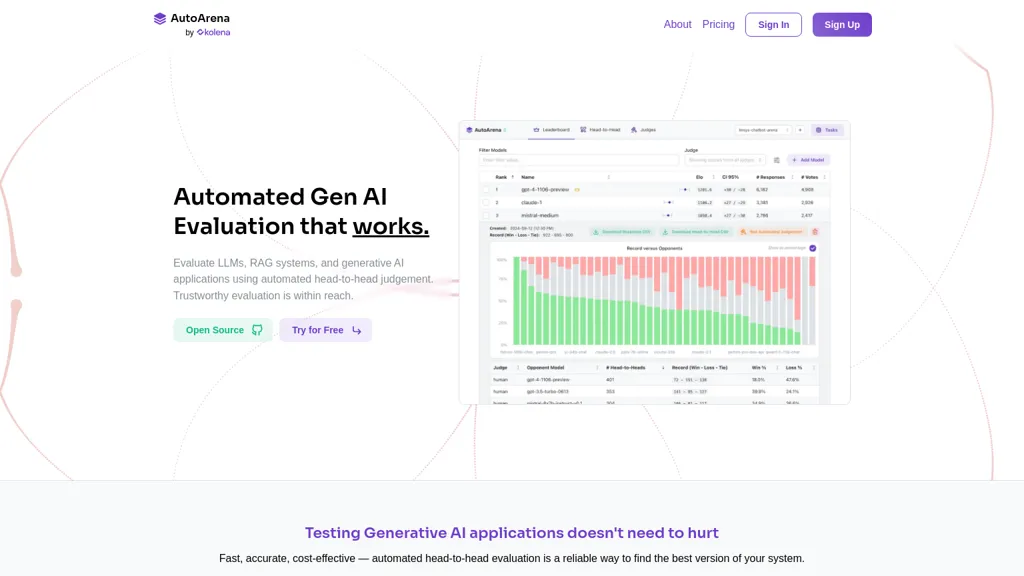

Autoarena is a tool for evaluating generative AI systems, including large language models (LLMs) and retrieval-augmented generation (RAG) applications. It uses automated head-to-head judgment: a judge model compares pairs of outputs and picks the better one, and these pairwise comparisons are aggregated to assess the systems under test. The tool supports fine-tuned judge models from several model families, which is intended to improve accuracy in specific domains. Judgments can be run in parallel to make efficient use of resources during testing, and randomization is used to mitigate evaluation bias. Autoarena is open source, so students, researchers, and enterprises can run it locally or in a cloud deployment.
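To make the idea of randomized, parallel head-to-head judging concrete, here is a minimal Python sketch. It is not Autoarena's actual API or implementation: the names `toy_judge`, `compare`, and `run_head_to_head` are hypothetical, and the judge is a toy stand-in that simply prefers the longer response, where a real setup would call a judge model.

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def toy_judge(prompt, first, second):
    """Hypothetical stand-in for a judge model: prefers the longer response.

    Returns "first", "second", or "tie".
    """
    if len(first) == len(second):
        return "tie"
    return "first" if len(first) > len(second) else "second"


def compare(prompt, response_a, response_b, judge, rng):
    """Judge one pair, randomizing presentation order to reduce position bias."""
    swapped = rng.random() < 0.5
    if swapped:
        first, second = response_b, response_a
    else:
        first, second = response_a, response_b
    verdict = judge(prompt, first, second)
    if verdict == "tie":
        return "tie"
    # Map the positional verdict back to the original A/B labels.
    first_is_a = not swapped
    return "A" if (verdict == "first") == first_is_a else "B"


def run_head_to_head(examples, judge, seed=0, max_workers=4):
    """Judge (prompt, response_a, response_b) triples in parallel and tally wins."""
    # One seeded RNG per example keeps runs reproducible regardless of
    # how the thread pool schedules the work.
    rngs = [random.Random(seed + i) for i in range(len(examples))]

    def judge_one(item):
        (prompt, response_a, response_b), rng = item
        return compare(prompt, response_a, response_b, judge, rng)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(judge_one, zip(examples, rngs)))
    return Counter(results)


if __name__ == "__main__":
    examples = [
        ("Explain RAG.", "A long and detailed answer.", "Short."),
        ("Explain RAG.", "Brief.", "A much more thorough answer."),
        ("Explain RAG.", "Same.", "Same."),
    ]
    print(run_head_to_head(examples, toy_judge))
```

Because the order in which the two responses are shown is shuffled per comparison, a judge that systematically favors whichever response appears first cannot consistently favor one system. The tally could then feed a win rate or a rating scheme; which aggregation Autoarena itself uses is not stated here.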

Technical Details
