automcp

Basic Information

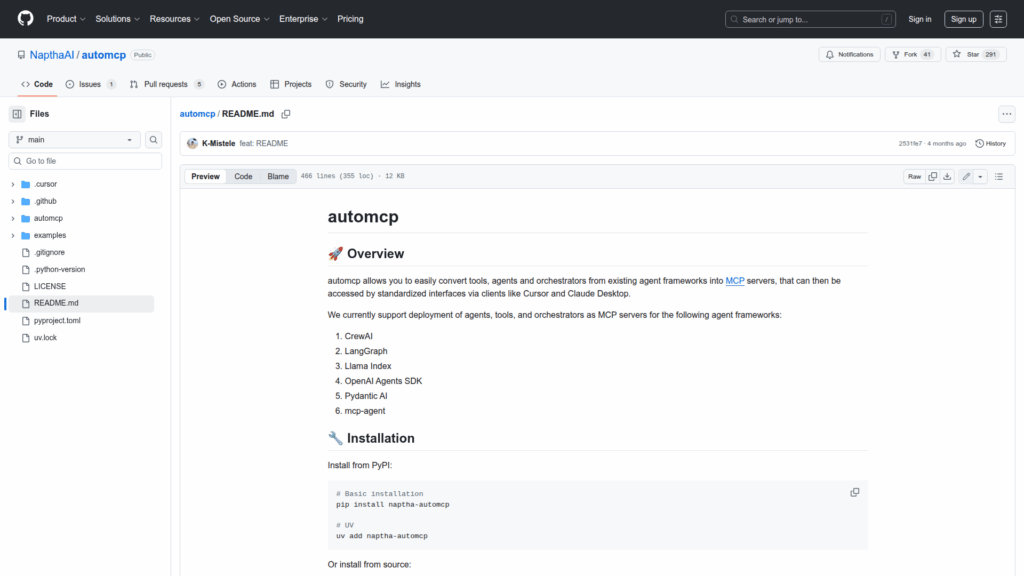

automcp is a developer tool that converts existing agents, tools, and orchestrators built with several agent frameworks into MCP servers, so they can be reached from any standard MCP client. Its CLI scaffolds server files for CrewAI, LangGraph, LlamaIndex, OpenAI Agents SDK, Pydantic AI, and mcp-agent. It generates a run_mcp.py entrypoint and adapter glue code, and ships example projects, so maintainers can expose their agents over the STDIO or SSE transport. The repository also documents how to configure packaging and runtime metadata for deployment to a hosted MCP servers-as-a-service platform, and how to connect clients such as Cursor by adding an mcp.json configuration.

Stars

287

App Details

Features

automcp provides project scaffolding that auto-generates a run_mcp.py server file, plus per-framework adapter functions that wrap agent instances as MCP tools. CLI helpers include automcp init, which creates server stubs, and automcp serve, which runs a server over the STDIO or SSE transport. Example folders demonstrate end-to-end usage for each supported framework. Packaging guidance covers pyproject settings and uv/uvx commands, which enable running a server directly from GitHub and deploying it to Naptha MCPaaS. Integration instructions cover Cursor client configuration via mcp.json, notes on STDIO safety, and debugging tips such as using the MCP Inspector. The repo also includes a template and instructions for adding new adapters in automcp/adapters.
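As a rough illustration of the Cursor integration mentioned above, the sketch below builds an mcp.json payload in Python. It assumes the `mcpServers` layout that Cursor reads for command-launched (STDIO) servers. The server name `my-agent` and the launch command `python run_mcp.py` are placeholders rather than values taken from the automcp documentation, so substitute whatever command actually starts your generated server.

```python
import json
from pathlib import Path


def build_cursor_config(server_name, command, args):
    """Return a dict in the `mcpServers` layout used by Cursor's mcp.json.

    server_name, command, and args are caller-supplied; nothing here is
    specific to automcp.
    """
    return {"mcpServers": {server_name: {"command": command, "args": list(args)}}}


def write_cursor_config(config, project_dir):
    """Write the config to <project_dir>/.cursor/mcp.json and return the path."""
    target = Path(project_dir) / ".cursor" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return target


if __name__ == "__main__":
    # Placeholder launch command: assumes the generated run_mcp.py can be
    # started this way in your project, which may not hold for every setup.
    config = build_cursor_config("my-agent", "python", ["run_mcp.py"])
    print(json.dumps(config, indent=2))
```

Calling `write_cursor_config(config, ".")` would place the file under the project's `.cursor` directory, which is where Cursor looks for project-level MCP settings.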

Use Cases

automcp lowers the friction of making disparate agent frameworks interoperable by exposing them through a common protocol, MCP, so clients and IDEs can run and interact with agents without per-framework client code. It streamlines development by scaffolding adapter code and a runnable server entrypoint, documents packaging requirements for cloud deployment, and supplies examples that accelerate testing and iteration. Developers benefit from built-in transport options (STDIO for local integration, SSE for networked access), explicit guidance on avoiding STDIO pitfalls, and scripted workflows for launching servers from GitHub or Naptha's hosting. The result is faster integration of existing agents into standardized tooling and deployment pipelines.
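One common STDIO pitfall, stated here as general MCP background rather than as a quote from the automcp guidance: the STDIO transport carries protocol messages on stdout, so any stray output written there by agent code can corrupt the stream. A minimal sketch of one mitigation is to route diagnostics to stderr instead. The logger name `mcp_server_example` is hypothetical.

```python
import logging
import sys


def configure_stderr_logging(level=logging.INFO):
    """Return a logger that writes only to stderr, leaving stdout untouched."""
    logger = logging.getLogger("mcp_server_example")  # hypothetical name
    logger.setLevel(level)
    # Do not pass records up to the root logger, whose handlers may differ.
    logger.propagate = False
    if not logger.handlers:  # avoid stacking handlers on repeated calls
        handler = logging.StreamHandler(sys.stderr)
        handler.setFormatter(
            logging.Formatter("%(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = configure_stderr_logging()
    log.info("agent starting")
    # Ad hoc debug output should also target stderr explicitly.
    print("debug detail", file=sys.stderr)
```

Third-party libraries that print to stdout are not covered by this sketch and may need their own redirection.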