bosquet

Basic Information

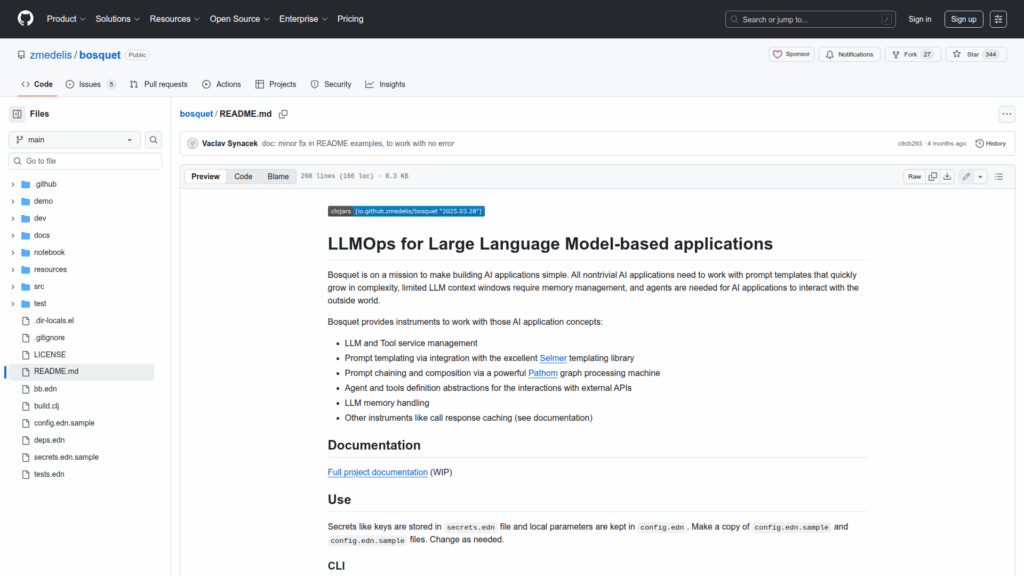

Bosquet is a Clojure LLMOps toolkit for building production and experimental applications on top of large language models. It provides primitives and conventions for managing LLM and tool services, composing and templating prompts, chaining prompt logic, and defining agents that interact with external APIs. The project addresses common problems in LLM applications: prompt templates that grow complex, limited model context windows that require memory handling, and the need to combine model calls with external tools. The README covers CLI usage, examples of programmatic generation and chat flows, configuration through config.edn and secrets.edn, and integrations with services such as OpenAI and Ollama. The library is aimed at developers and teams building LLM-based systems rather than end users.
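To make "templating prompts" and "chaining prompt logic" concrete, here is a minimal sketch in plain Clojure. It does not use Bosquet's API: `fill-template`, `fake-llm`, `run-chain`, and the `{{slot}}` placeholder syntax are hypothetical names chosen for illustration, and the model call is a stub so the code runs offline.

```clojure
(ns example.prompt-chain
  (:require [clojure.string :as str]))

;; Hypothetical helper (not Bosquet's API): replace each {{name}} slot
;; with the value stored under the keyword :name in `data`.
;; Slots with no matching key are replaced with an empty string.
(defn fill-template
  [template data]
  (str/replace template
               #"\{\{\s*([\w-]+)\s*\}\}"
               (fn [[_ slot]]
                 (str (get data (keyword slot) "")))))

;; Stand-in for a model call so the sketch runs without a network;
;; a real system would send `prompt` to an LLM service here.
(defn fake-llm
  [prompt]
  (str "<completion for: " prompt ">"))

;; Run the steps in order. Each step is a [key template] pair. The
;; completion for a step is stored under its key, so later templates
;; can refer to earlier results.
(defn run-chain
  [llm steps inputs]
  (reduce (fn [ctx [k template]]
            (assoc ctx k (llm (fill-template template ctx))))
          inputs
          steps))

(def steps
  [[:summary "Summarize in one sentence: {{text}}"]
   [:title   "Write a title for this summary: {{summary}}"]])

(def result
  (run-chain fake-llm steps {:text "Bosquet is a Clojure LLMOps toolkit."}))
```

In this sketch `result` is a map holding the original `:text` plus the `:summary` and `:title` completions, and the `:title` prompt embeds the `:summary` completion. Passing the model as a function argument keeps the chaining logic testable without a live service.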