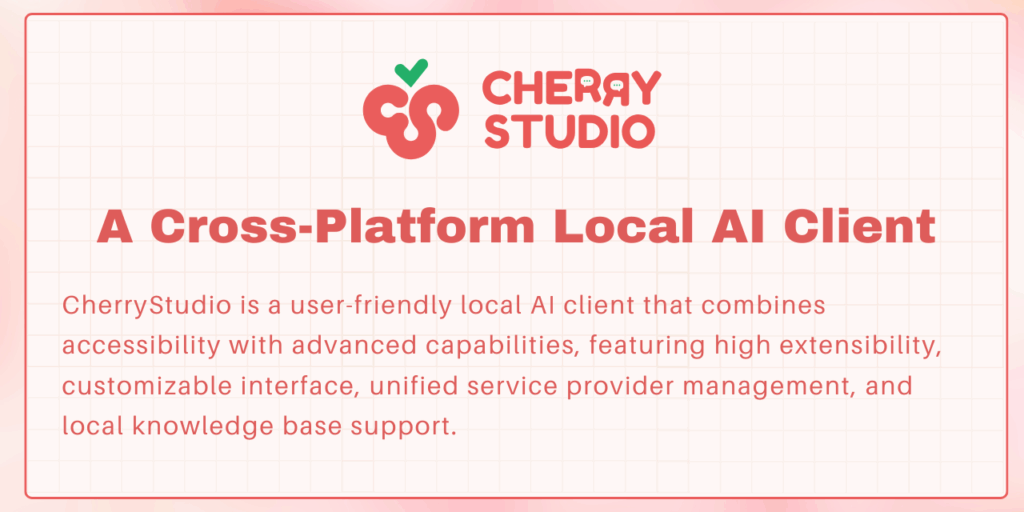

Cherry Studio

Basic Information

Cherry Studio is a cross-platform desktop client that provides a unified interface to multiple large language model (LLM) providers and to local models. It is designed for users who want to run AI assistants and manage conversations on Windows, macOS, or Linux without setting up an environment manually.

The project ships with pre-configured assistants and lets users create custom ones, converse with several models at once, and process documents, including text, images, Office files, and PDFs. It also includes file management, backup via WebDAV, a Model Context Protocol (MCP) server component, ready-made themes and UI options, and an Enterprise Edition for private deployments with centralized management of models, knowledge, and access.

The repository welcomes community contributions, offers incentives for developer co-creation, and maintains a public roadmap covering features such as memory, OCR, TTS, plugins, and mobile clients.