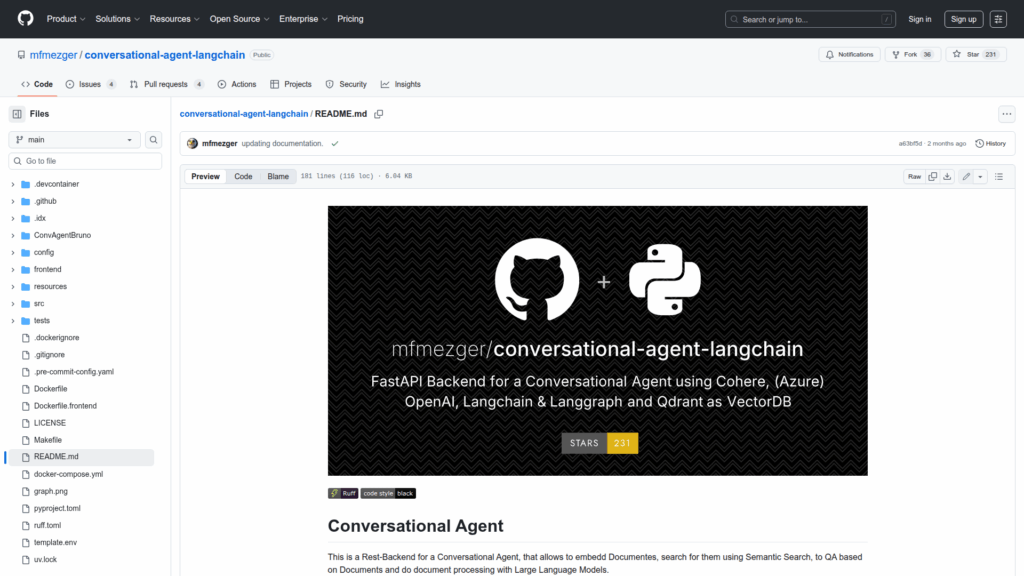

conversational-agent-langchain

Basic Information

This repository provides a REST backend for a conversational retrieval-augmented generation (RAG) agent. It lets you embed documents, run semantic and hybrid search, answer questions over ingested documents, and process documents with large language models. The backend combines a FastAPI-based API, a Qdrant vector database for storing embeddings, and support for multiple LLM providers via LiteLLM. The project includes Docker Compose orchestration for local deployment, a simple Streamlit GUI, ingestion scripts for bulk data loading, and API documentation served at /docs and /redoc. The default providers are Cohere for embeddings and Google AI Studio (Gemini) for generation; LiteLLM makes it possible to swap in other providers. The codebase also includes testing and development instructions that use Poetry, uvicorn, and pytest.
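As a quick way to confirm that a local deployment is up, the documentation endpoints can be probed from a script. The following is a minimal sketch using only the Python standard library. The base URL and port are assumptions, not values taken from the repository, and `doc_urls` and `is_reachable` are hypothetical helper names. The `swagger` label assumes FastAPI's default, where /docs serves Swagger UI.

```python
from urllib.error import URLError
from urllib.parse import urljoin
from urllib.request import urlopen

# Assumption: the backend listens here; adjust to match your Docker Compose setup.
BASE_URL = "http://localhost:8001"


def doc_urls(base_url: str = BASE_URL) -> dict[str, str]:
    """Build the URLs of the two API documentation pages."""
    # Ensure exactly one trailing slash so urljoin appends instead of replacing.
    base = base_url.rstrip("/") + "/"
    return {
        "swagger": urljoin(base, "docs"),
        "redoc": urljoin(base, "redoc"),
    }


def is_reachable(url: str, timeout: float = 2.0) -> bool:
    """Return True if the URL answers with HTTP 200, False on any connection or HTTP error."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (URLError, OSError):
        return False


if __name__ == "__main__":
    for name, url in doc_urls().items():
        print(f"{name}: {url} reachable={is_reachable(url)}")
```

If both pages report as reachable, the API is serving requests and the interactive documentation can be opened in a browser to explore the available endpoints.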