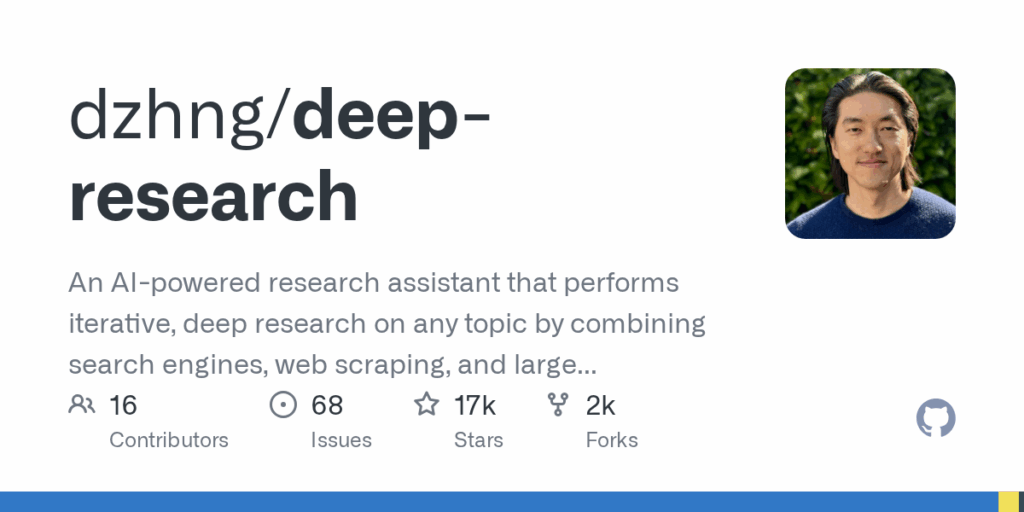

deep research

Basic Information

Deep Research is an AI-powered research assistant implemented as a compact Node.js project. It performs iterative, deep research on arbitrary topics by combining search engine queries, web scraping, and large language models. The repository aims to be a minimal, easy-to-understand implementation (kept under 500 lines of code): it generates targeted SERP queries, retrieves and extracts page content through the Firecrawl API, and synthesizes the findings using OpenAI or a local LLM endpoint. Breadth and depth are configurable parameters, the project can run in a Docker container, and it can switch to alternative model providers, such as DeepSeek R1 hosted on Fireworks, when the corresponding API keys are present. It is designed for users who want a research agent that runs out of the box, or a small base to extend for custom research workflows.
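
As a rough illustration of how the breadth and depth parameters might interact, here is a minimal TypeScript sketch of a recursive research loop. It is not the repository's code: `deepResearch`, `ResearchDeps`, `nextBreadth`, and the rule of halving breadth at each deeper level are all assumptions made for illustration. The search and LLM calls are injected as plain functions, so no real Firecrawl or OpenAI API signature is implied.

```typescript
// Hypothetical types and names; none are taken from the repository.
type SearchResult = { url: string; content: string };

interface ResearchDeps {
  // Would ask an LLM for up to `count` search queries about `topic`.
  generateQueries(topic: string, count: number, learnings: string[]): Promise<string[]>;
  // Would run one search and return scraped page content.
  search(query: string): Promise<SearchResult[]>;
  // Would ask an LLM to distil scraped content into short learnings.
  extractLearnings(query: string, results: SearchResult[]): Promise<string[]>;
}

interface ResearchOutput {
  learnings: string[];
  visitedUrls: string[];
}

// Assumption: each deeper level explores half as many queries (rounded up).
function nextBreadth(breadth: number): number {
  return Math.max(1, Math.ceil(breadth / 2));
}

async function deepResearch(
  topic: string,
  breadth: number,
  depth: number,
  deps: ResearchDeps,
  learnings: string[] = [],
): Promise<ResearchOutput> {
  const allLearnings = new Set<string>(learnings);
  const urls = new Set<string>();

  // Breadth: how many queries are issued at this level.
  const queries = (await deps.generateQueries(topic, breadth, learnings)).slice(0, breadth);

  for (const query of queries) {
    const results = await deps.search(query);
    results.forEach((r) => urls.add(r.url));

    const found = await deps.extractLearnings(query, results);
    found.forEach((l) => allLearnings.add(l));

    // Depth: how many more levels to follow up on each query.
    if (depth > 1) {
      const deeper = await deepResearch(
        query,
        nextBreadth(breadth),
        depth - 1,
        deps,
        Array.from(allLearnings),
      );
      deeper.learnings.forEach((l) => allLearnings.add(l));
      deeper.visitedUrls.forEach((u) => urls.add(u));
    }
  }

  return { learnings: Array.from(allLearnings), visitedUrls: Array.from(urls) };
}
```

In this sketch a caller would supply its own `ResearchDeps` (for example, wrappers around a search or scraping service and an LLM client) and then pass the collected learnings to a final report-writing step. Running the queries sequentially keeps the example simple; a real agent might run them concurrently with a rate limit.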