dynasaur

Basic Information

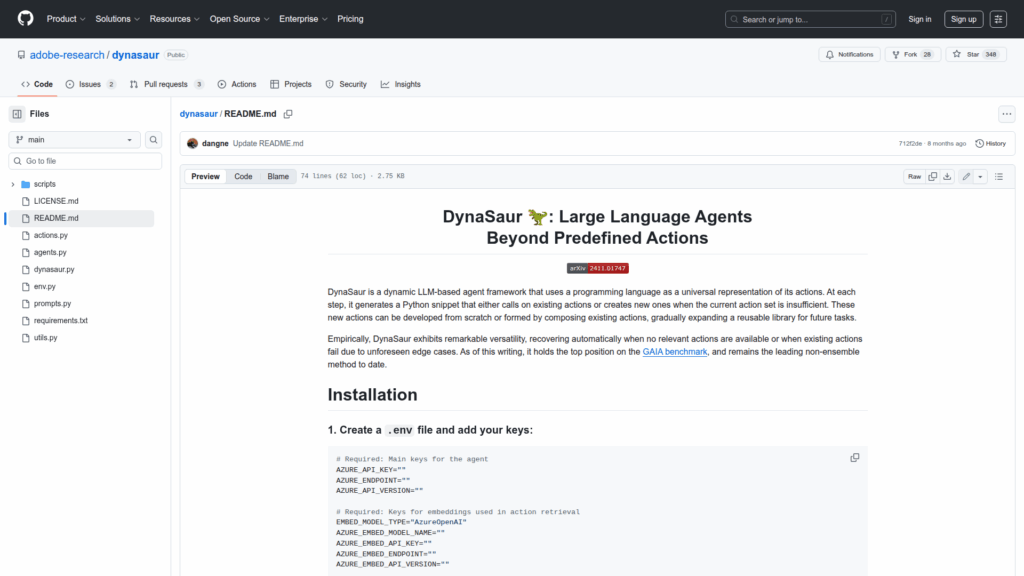

DynaSaur is a research-oriented framework for building dynamic, LLM-driven agents that use a programming language as the universal representation of actions. The agent does not choose from a fixed set of predefined actions. Instead, at each step it generates a Python snippet that either invokes existing actions or defines new ones when needed. New actions can be written from scratch or composed from existing primitives, so the agent's library of reusable actions grows over time.

The repository provides the code, an example entry point, and instructions for running the system. These instructions cover the required environment variables, the API keys for the Azure-hosted LLM and embedding models, and the steps for downloading the GAIA benchmark data. The repository is intended as a platform for experimenting with, and reproducibly evaluating, the dynamic action generation methods described in the linked paper.
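The core idea can be sketched in a few lines of Python. Each step is a Python snippet that may call existing actions or define new ones, and any new actions are kept for later steps. This is a minimal illustration under stated assumptions, not DynaSaur's implementation. The `ActionLibrary` class and the `word_count` primitive are hypothetical. The hardcoded snippets stand in for LLM output. The sketch runs snippets with plain `exec` and performs no sandboxing.

```python
import ast
from typing import Any, Callable, Dict


class ActionLibrary:
    """Hypothetical registry of reusable actions (plain Python functions)."""

    def __init__(self) -> None:
        self.actions: Dict[str, Callable[..., Any]] = {}

    def register(self, fn: Callable[..., Any]) -> None:
        # Store the action under its function name so later snippets can call it.
        self.actions[fn.__name__] = fn

    def execute(self, snippet: str) -> Dict[str, Any]:
        # Run a (stand-in) LLM-generated snippet with all known actions in scope.
        # NOTE: plain exec with no sandboxing; illustration only.
        namespace: Dict[str, Any] = dict(self.actions)
        exec(snippet, namespace)
        # Every top-level function the snippet defines becomes a reusable action.
        for node in ast.parse(snippet).body:
            if isinstance(node, ast.FunctionDef):
                self.register(namespace[node.name])
        return namespace


def word_count(text: str) -> int:
    """Hypothetical primitive action."""
    return len(text.split())


lib = ActionLibrary()
lib.register(word_count)

# Step 1: the agent invokes an existing action.
step1 = lib.execute('result = word_count("the quick brown fox")')

# Step 2: the agent composes a new action from an existing primitive.
step2 = lib.execute(
    "def average_word_count(texts):\n"
    "    return sum(word_count(t) for t in texts) / len(texts)\n"
    "result = average_word_count(['a b', 'c d e f'])\n"
)

# Step 3: a later snippet reuses the action created in step 2.
step3 = lib.execute("result = average_word_count(['one', 'two three'])")

print(step1["result"], step2["result"], step3["result"])  # 4 3.0 1.5
```

Registering every top-level function a snippet defines is one simple way to make generated actions persist across steps. The paper's actual mechanism for storing and retrieving actions may differ.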