edsl

Basic Information

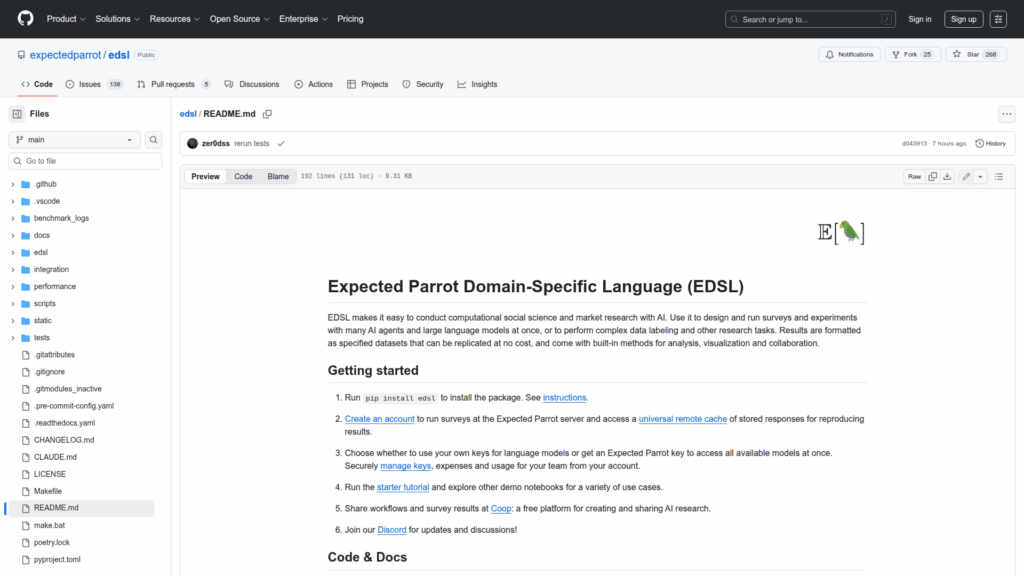

Expected Parrot Domain-Specific Language (EDSL) is a Python library for designing, running, and analyzing AI-driven surveys and experiments in computational social science and market research. It lets researchers run surveys and labeling tasks across many AI agents and large language models in parallel, producing reproducible datasets in a specified format. Surveys can be run locally or on a server, and the package integrates with an Expected Parrot account and the Coop platform for sharing workflows. It is intended for teams and researchers who need to compare responses across models, simulate diverse agent personas, run parameterized scenarios built from multiple input formats, and produce analysis-ready results. EDSL is installed with pip (`pip install edsl`) and targets Python 3.9 through 3.13. Running surveys requires API keys for the language models used; these keys can be managed through a user account.
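The core idea of asking the same questions across many agents, models, and scenarios can be pictured as a Cartesian fan-out that is run in parallel and collected into flat rows. The sketch below illustrates that idea with the standard library only. It is not EDSL's API: `Job`, `fake_model`, and `run_jobs` are hypothetical names, and `fake_model` is a deterministic stub standing in for a real LLM call, so no API key is needed.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from itertools import product


@dataclass
class Job:
    """One (agent, model, scenario) combination; a hypothetical name, not part of EDSL."""
    agent: dict
    model: str
    scenario: dict


def fake_model(model: str, prompt: str) -> int:
    """Hypothetical stub for an LLM call that returns a rating from 1 to 5.

    Uses SHA-256 rather than the built-in hash(), which is randomized per
    process for strings, so the same inputs always give the same answer.
    """
    digest = hashlib.sha256(f"{model}|{prompt}".encode()).hexdigest()
    return int(digest, 16) % 5 + 1


def run_jobs(template: str, agents: list[dict], models: list[str],
             scenarios: list[dict], max_workers: int = 8) -> list[dict]:
    # Fan out: every agent x model x scenario combination becomes one job.
    jobs = [Job(a, m, s) for a, m, s in product(agents, models, scenarios)]

    def run_one(job: Job) -> dict:
        # Fill the question template with the agent's traits and the scenario's fields.
        prompt = template.format(**job.agent, **job.scenario)
        answer = fake_model(job.model, prompt)
        # One flat, analysis-ready row per job.
        return {**job.agent, "model": job.model, **job.scenario, "answer": answer}

    # Run jobs concurrently; pool.map returns results in job order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_one, jobs))


agents = [{"persona": "student"}, {"persona": "retiree"}]
models = ["model-a", "model-b"]
scenarios = [{"product": "a laptop"}, {"product": "a bicycle"}, {"product": "a phone"}]

rows = run_jobs("As a {persona}, rate {product} from 1 to 5.", agents, models, scenarios)
print(len(rows))  # 12 rows: 2 agents x 2 models x 3 scenarios
```

A thread pool fits here because real model calls are network-bound, and `pool.map` keeps rows in a fixed order so repeated runs with a deterministic model produce identical output. For EDSL's actual classes and methods, consult the library's documentation.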