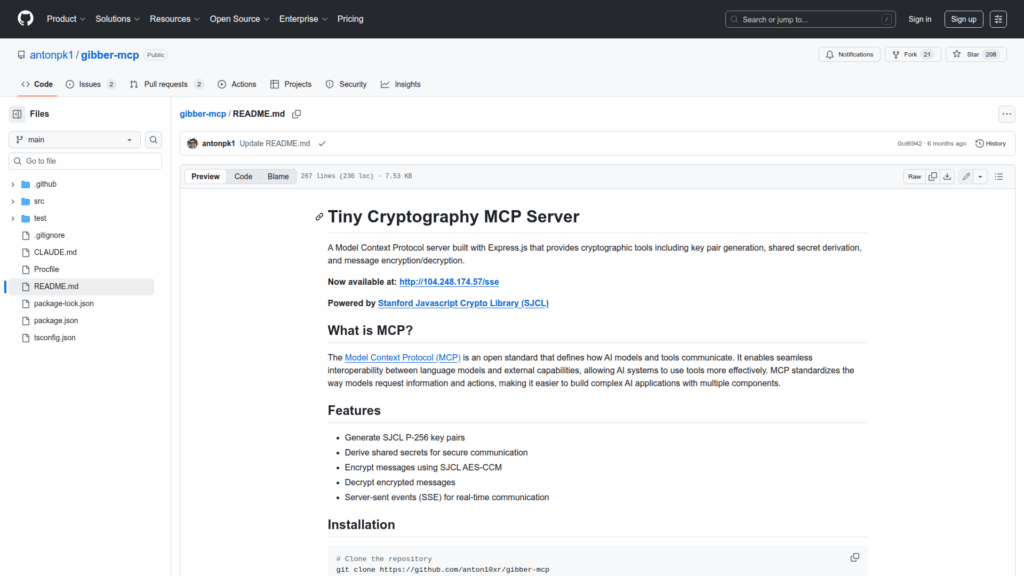

gibber-mcp

Basic Information

This repository implements a small Model Context Protocol (MCP) server for developers building interoperable AI models and tools that need secure, real-time messaging. It is an Express.js application that exposes a Server-Sent Events (SSE) endpoint and a message-posting endpoint, so LLMs or other services can connect and exchange context. The server bundles cryptographic tooling built on the Stanford JavaScript Crypto Library (SJCL). This tooling generates P-256 key pairs, derives shared secrets, and performs AES-CCM encryption and decryption, which enables end-to-end encrypted exchanges between agents. The README includes a worked example of a Sonnet LLM thread that walks through a key exchange, derivation of a shared secret, encryption of a short message, and its decryption. The project ships npm scripts for development and production, lets you set the listening port through the PORT environment variable, and is released under the MIT License.
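The key exchange and encryption flow described above can be sketched with Node's built-in `node:crypto` module. This is a swapped-in illustration, not the project's own code, because gibber-mcp itself uses SJCL. Several details are assumptions chosen for the example:

- the helper names (`createKeyPair`, `deriveSharedKey`, `encrypt`, `decrypt`, `SealedMessage`);
- hashing the raw ECDH secret with SHA-256 to form the AES key;
- the 12-byte nonce and the 16-byte authentication tag;
- the hypothetical `gibber-demo` associated-data label.

```typescript
import {
  ECDH,
  createCipheriv,
  createDecipheriv,
  createECDH,
  createHash,
  randomBytes,
} from "node:crypto";

// Everything the receiver needs to open an AES-CCM message (hypothetical shape).
interface SealedMessage {
  nonce: Buffer;
  ciphertext: Buffer;
  tag: Buffer;
}

// Hypothetical associated-data label; it is authenticated but not encrypted.
const AAD = Buffer.from("gibber-demo", "utf8");

// Generate a fresh P-256 (OpenSSL name: prime256v1) key pair for one agent.
function createKeyPair(): ECDH {
  const ecdh = createECDH("prime256v1");
  ecdh.generateKeys();
  return ecdh;
}

// ECDH: combine our private key with the peer's public key. Both sides get the
// same secret, which is hashed with SHA-256 into a 256-bit AES key (assumed KDF).
function deriveSharedKey(own: ECDH, peerPublicKey: Buffer): Buffer {
  const secret = own.computeSecret(peerPublicKey);
  return createHash("sha256").update(secret).digest();
}

// AES-256-CCM encryption. CCM needs the plaintext length up front, and
// update() may be called only once per message.
function encrypt(key: Buffer, plaintext: string): SealedMessage {
  const nonce = randomBytes(12); // CCM accepts nonces of 7 to 13 bytes
  const data = Buffer.from(plaintext, "utf8");
  const cipher = createCipheriv("aes-256-ccm", key, nonce, { authTagLength: 16 });
  cipher.setAAD(AAD, { plaintextLength: data.length });
  const ciphertext = cipher.update(data);
  cipher.final();
  return { nonce, ciphertext, tag: cipher.getAuthTag() };
}

// AES-256-CCM decryption. final() throws if the tag does not verify,
// e.g. when the ciphertext was tampered with or the key is wrong.
function decrypt(key: Buffer, msg: SealedMessage): string {
  const decipher = createDecipheriv("aes-256-ccm", key, msg.nonce, { authTagLength: 16 });
  decipher.setAuthTag(msg.tag);
  decipher.setAAD(AAD, { plaintextLength: msg.ciphertext.length });
  const plaintext = decipher.update(msg.ciphertext);
  decipher.final();
  return plaintext.toString("utf8");
}
```

In use, each agent calls `createKeyPair()` and sends the other side its `getPublicKey()` output, for example over the server's message endpoint. Each agent then runs `deriveSharedKey` locally, so only public keys and ciphertexts ever pass through the server. SJCL's own API and defaults (key size, tag length, serialization format) differ from this sketch.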