gibber-mcp

Basic Information

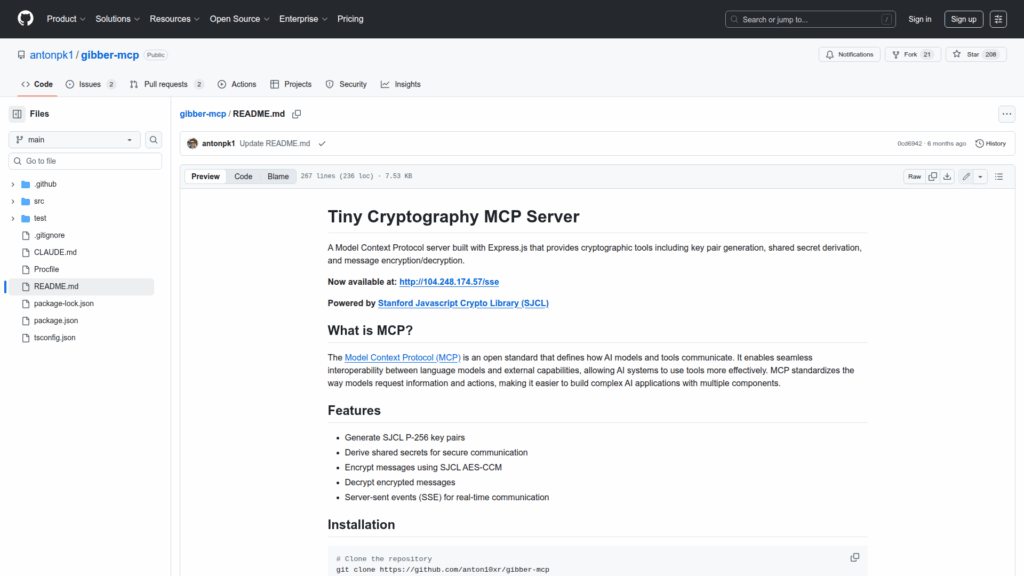

gibber-mcp is a lightweight Model Context Protocol (MCP) server, built on Express.js, that provides cryptographic primitives for secure communication between AI models and tools. It is intended as an infrastructure component for developers building MCP-compatible agents or adding tool use to LLM-based workflows.

The server exposes endpoints for server-sent events and for posting messages. It also bundles SJCL-based cryptography utilities that let models generate P-256 key pairs, derive shared secrets, and encrypt and decrypt with AES-CCM at runtime. The README includes an example Sonnet 3.7 conversation that walks through key exchange, encryption, and decryption. The project can be run locally or in production with the standard npm scripts, and the listening port is configurable through the PORT environment variable.
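The key-exchange, encryption, and decryption flow described above can be sketched with Node's built-in node:crypto module. This is not gibber-mcp's code: the project uses SJCL, and this sketch swaps in Node's ECDH, HKDF, and AES-CCM APIs instead. The HKDF-SHA256 key-derivation step, the "gibber-mcp-demo" info label, the 12-byte nonce, the 16-byte tag, and the helper names (newP256KeyPair, deriveKey, encrypt, decrypt) are all assumptions for illustration.

```typescript
import { createCipheriv, createDecipheriv, createECDH, hkdfSync, randomBytes, type ECDH } from "node:crypto";

// Assumed parameters; gibber-mcp/SJCL may use different nonce and tag sizes.
const NONCE_BYTES = 12; // CCM allows 7..13; 12 permits messages up to 16 MiB
const TAG_BYTES = 16;

// Everything the recipient needs besides the key.
interface Sealed {
  nonce: Buffer;
  ciphertext: Buffer;
  tag: Buffer;
}

// Generate a P-256 key pair (OpenSSL names this curve "prime256v1").
function newP256KeyPair(): ECDH {
  const ecdh = createECDH("prime256v1");
  ecdh.generateKeys();
  return ecdh;
}

// ECDH: combine our private key with the peer's public key, then stretch the
// raw shared secret into a 32-byte AES-256 key with HKDF-SHA256 (assumed step).
function deriveKey(own: ECDH, peerPublicKey: Buffer): Buffer {
  const secret = own.computeSecret(peerPublicKey);
  return Buffer.from(hkdfSync("sha256", secret, Buffer.alloc(0), "gibber-mcp-demo", 32));
}

function encrypt(key: Buffer, plaintext: string): Sealed {
  const nonce = randomBytes(NONCE_BYTES);
  const cipher = createCipheriv("aes-256-ccm", key, nonce, { authTagLength: TAG_BYTES });
  // CCM processes the whole message at once, so update() is called exactly once.
  const ciphertext = cipher.update(Buffer.from(plaintext, "utf8"));
  cipher.final(); // computes the authentication tag
  return { nonce, ciphertext, tag: cipher.getAuthTag() };
}

function decrypt(key: Buffer, sealed: Sealed): string {
  const decipher = createDecipheriv("aes-256-ccm", key, sealed.nonce, { authTagLength: TAG_BYTES });
  // The tag must be set before update(); final() throws if authentication fails.
  decipher.setAuthTag(sealed.tag);
  const plaintext = decipher.update(sealed.ciphertext);
  decipher.final();
  return plaintext.toString("utf8");
}

// Usage: two parties agree on a key, then exchange one message.
const alice = newP256KeyPair();
const bob = newP256KeyPair();
const aliceKey = deriveKey(alice, bob.getPublicKey());
const bobKey = deriveKey(bob, alice.getPublicKey());

const sealed = encrypt(aliceKey, "hello from alice");
console.log(decrypt(bobKey, sealed)); // hello from alice
```

Both sides arrive at the same key without ever transmitting it; only the public keys, nonce, ciphertext, and tag cross the wire. Because CCM is authenticated, decrypt throws instead of returning corrupted plaintext when the tag or ciphertext has been altered or the wrong key is used.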