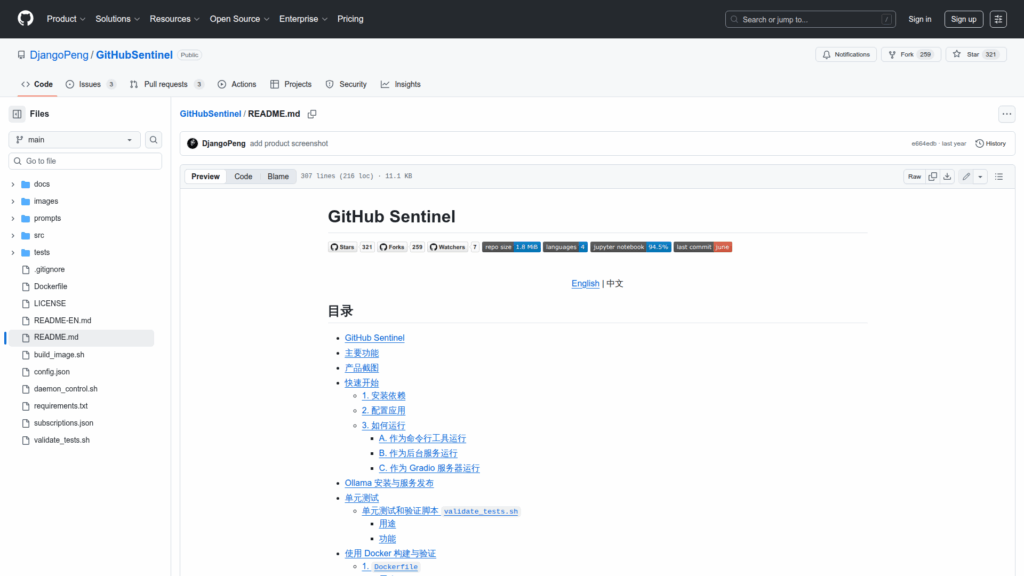

GitHubSentinel

Basic Information

GitHub Sentinel is an AI agent for automated information retrieval and high-value content mining in the era of large language models. It is designed for users who need frequent, large-scale updates from public information channels, with an initial focus on GitHub repositories and extensible support for Hacker News topics and trends. The project collects repository activity (commits, issues, and pull requests), aggregates the updates, and produces natural-language progress reports using configurable LLM backends. It targets open-source enthusiasts, individual developers, and investors who want to monitor project progress and trending technical topics without manual polling. The tool can run interactively from the command line, as a background daemon for scheduled checks, or through a Gradio web interface for GUI-based management.
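The collect, aggregate, and report flow described above can be illustrated with a small sketch. This is not the project's actual code: `RepoUpdates`, `build_digest`, and `build_prompt` are hypothetical names introduced here for illustration, and the sketch assumes the activity has already been fetched and that the resulting prompt would be passed to whichever LLM backend is configured.

```python
from dataclasses import dataclass, field


@dataclass
class RepoUpdates:
    """Hypothetical container for one repository's recent activity."""
    repo: str
    commits: list[str] = field(default_factory=list)
    issues: list[str] = field(default_factory=list)
    pull_requests: list[str] = field(default_factory=list)


def build_digest(updates: RepoUpdates) -> str:
    """Aggregate raw activity titles into a single Markdown digest."""
    lines = [f"# Updates for {updates.repo}"]
    sections = [
        ("Commits", updates.commits),
        ("Issues", updates.issues),
        ("Pull Requests", updates.pull_requests),
    ]
    for title, items in sections:
        lines.append(f"## {title}")
        if items:
            lines.extend(f"- {item}" for item in items)
        else:
            # Keep empty sections visible so the report states "no activity".
            lines.append("- (none)")
    return "\n".join(lines)


def build_prompt(digest: str) -> str:
    """Wrap the digest in an instruction for an LLM (assumed prompt wording)."""
    return (
        "Summarize the following repository activity as a short progress report.\n\n"
        + digest
    )


if __name__ == "__main__":
    updates = RepoUpdates(
        repo="octocat/Hello-World",
        commits=["Fix typo in README"],
        pull_requests=["Add contribution guide"],
    )
    print(build_prompt(build_digest(updates)))
```

Keeping the digest step separate from the prompt step means the same aggregated text could be saved as a raw report or sent to different LLM backends without refetching the activity.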