gpt-engineer

Basic Information

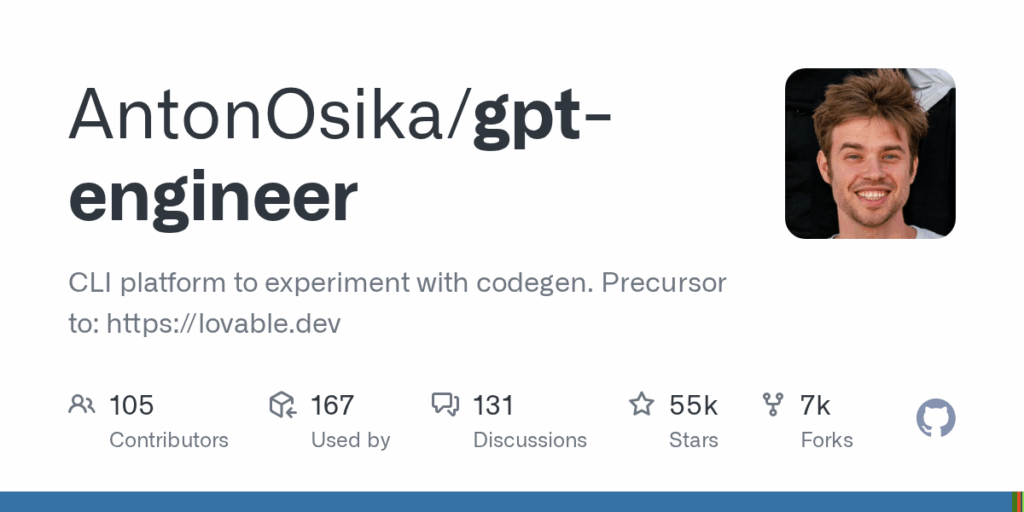

gpt-engineer is an open-source platform and CLI for experimenting with code generation: users specify software in natural language, and an AI writes, runs, and iteratively improves the code. It is designed for developers and researchers building coding agents and agent workflows. The repository provides a command-line tool (gpte) that creates new projects from a prompt file or improves existing codebases. It supports vision-capable models for image inputs and multiple model backends, including OpenAI, Azure OpenAI, Anthropic, and configurable local or open-source models, and it can be run through Docker or Codespaces. The project also includes benchmarking utilities, and its community governance is intended to support sustained development and collaboration.
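The prompt-file workflow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's own tooling: the helper names `scaffold_project` and `gpte_command` are hypothetical, and both the default prompt file name (`prompt`) and the `-i` flag for improving an existing codebase are assumptions that should be checked against `gpte --help` for the installed version. The sketch only builds the command; it does not call any model.

```python
import tempfile
from pathlib import Path


def scaffold_project(project_dir: Path, prompt_text: str) -> Path:
    """Create the project directory and write the natural-language spec into it."""
    project_dir.mkdir(parents=True, exist_ok=True)
    # Assumption: gpte reads a file named "prompt" in the project directory.
    prompt_path = project_dir / "prompt"
    prompt_path.write_text(prompt_text.strip() + "\n", encoding="utf-8")
    return prompt_path


def gpte_command(project_dir: Path, improve: bool = False) -> list[str]:
    """Build (but do not run) the argument list for a gpte invocation."""
    cmd = ["gpte", str(project_dir)]
    if improve:
        # Assumption: "-i" switches gpte to improving an existing codebase.
        cmd.append("-i")
    return cmd


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        project = Path(tmp) / "todo-app"
        scaffold_project(project, "Build a command-line todo list in Python.")
        # Pass the list to subprocess.run(...) once gpte is installed and an
        # API key for the chosen backend is configured.
        print(gpte_command(project))
        print(gpte_command(project, improve=True))
```

Returning the command as a list rather than a shell string keeps paths with spaces intact when the list is later handed to `subprocess.run`.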

Links

Stars

54712

GitHub Repository

App Details

Features

- Command-line interface gpte to create and improve code projects from a plain text prompt file.
- Customizable preprompts to define agent identity and lightweight memory across projects, with a flag to use custom preprompts.
- Support for vision-capable models by specifying an image directory as additional context.
- Compatibility with OpenAI, Azure OpenAI, Anthropic and instructions for running local open-source models.
- Installation options include pip package, development via git and poetry, Docker, and Codespaces.
- A bench binary and templates for benchmarking custom agent implementations against public datasets such as APPS and MBPP.
- Community governance, contribution guidelines, and a mission to support coding-agent builders.
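To make the optional features concrete, the following sketch models a gpte run as a small Python dataclass and renders it as a command line. The class `GpteRun` and its field names are hypothetical, and the flag spellings `--image_directory` and `--use-custom-preprompts` are assumptions recalled from the project's documentation; confirm them with `gpte --help` before relying on them. Nothing here invokes the tool.

```python
import shlex
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GpteRun:
    """Hypothetical description of one gpte invocation."""

    project_dir: str
    image_directory: Optional[str] = None   # extra visual context for vision models
    use_custom_preprompts: bool = False     # use preprompts stored with the project

    def to_argv(self) -> List[str]:
        """Render the run as an argument list suitable for subprocess.run."""
        argv = ["gpte", self.project_dir]
        if self.image_directory is not None:
            # Assumed flag name for the image directory option.
            argv += ["--image_directory", self.image_directory]
        if self.use_custom_preprompts:
            # Assumed flag name for the custom preprompts option.
            argv.append("--use-custom-preprompts")
        return argv


if __name__ == "__main__":
    run = GpteRun(
        project_dir="projects/example-vision",
        image_directory="prompt/images",
        use_custom_preprompts=True,
    )
    # shlex.join quotes arguments so the line can be pasted into a shell.
    print(shlex.join(run.to_argv()))
```

Keeping the options in a dataclass makes it straightforward to log or compare the exact configuration used for each experiment.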

Use Cases

For developers and researchers, gpt-engineer accelerates prototyping of coding agents by turning natural-language specifications into runnable code and supporting iterative improvement. The CLI workflow standardizes how projects are created and improved, which reduces setup friction and makes experiments easier to reproduce. Support for multiple model providers, together with instructions for local models, lets teams evaluate different backends without major rewrites. Vision input broadens the use cases to include diagrams and UX context. The benchmarking tools let builders compare agent implementations on public datasets. Custom preprompts provide a simple mechanism for setting agent identity and making behavior changes persist across projects. Docker, Codespaces, and documented install steps make the tool easier to run in varied environments.