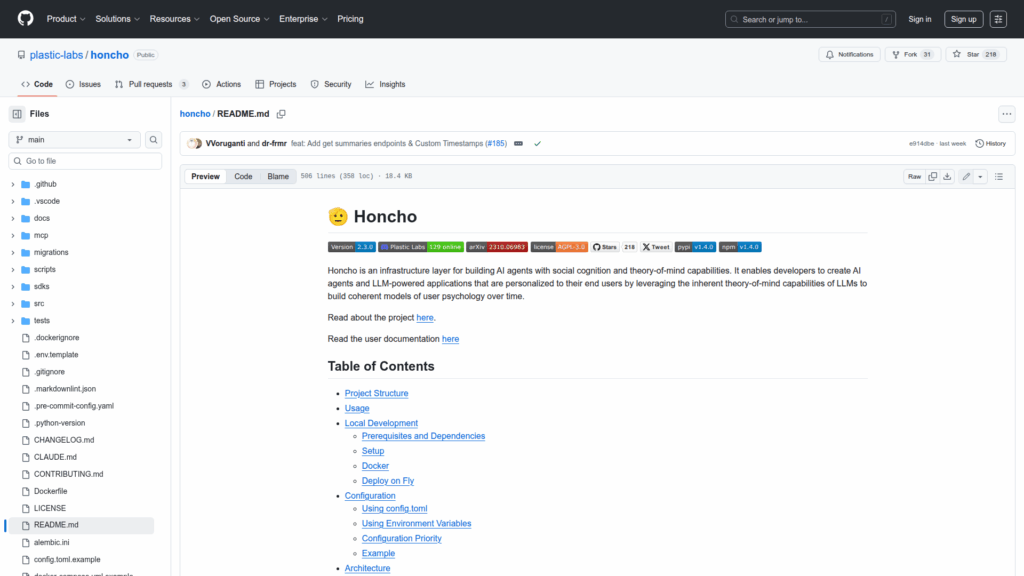

honcho

Basic Information

Honcho is an infrastructure service and API for building AI agents and LLM-powered applications that model user psychology using theory-of-mind concepts. The repository hosts the core FastAPI storage service, which manages persistent application state for multi-tenant workspaces, peers, sessions, messages, collections, and documents. It provides client SDKs for Python and TypeScript, plus core SDKs for advanced use. Honcho splits its functionality into a Storage layer and an Insights layer: applications store conversational and peer-level data, run asynchronous pipelines that derive representations and summaries from it, and query personalized insights through a dialectic chat endpoint. The project also includes local development support, Docker Compose templates, deployment samples, configuration through config.toml and environment variables, and guidance for setting up a Postgres database with vector embeddings.
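
To make the storage hierarchy described above more concrete, here is a minimal in-memory sketch of how workspaces, peers, sessions, and messages might relate. It is an illustration only: the class names, fields, and methods (`Workspace`, `Session`, `Message`, `add_message`, `messages_by_peer`) are hypothetical and are not Honcho's actual schema or SDK interface.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    peer_id: str  # hypothetical field: the peer that authored the message
    content: str


@dataclass
class Session:
    session_id: str
    messages: list[Message] = field(default_factory=list)


@dataclass
class Workspace:
    """Hypothetical tenant boundary: peers and sessions live inside one workspace."""

    workspace_id: str
    peers: set[str] = field(default_factory=set)
    sessions: dict[str, Session] = field(default_factory=dict)

    def add_message(self, session_id: str, peer_id: str, content: str) -> Message:
        # Register the peer and create the session the first time each is seen.
        self.peers.add(peer_id)
        session = self.sessions.setdefault(session_id, Session(session_id))
        message = Message(peer_id, content)
        session.messages.append(message)
        return message

    def messages_by_peer(self, peer_id: str) -> list[Message]:
        # Peer-level view: collect one peer's messages across every session.
        return [
            m
            for s in self.sessions.values()
            for m in s.messages
            if m.peer_id == peer_id
        ]


if __name__ == "__main__":
    ws = Workspace("demo")
    ws.add_message("onboarding", "alice", "I prefer short answers.")
    ws.add_message("onboarding", "assistant", "Noted.")
    ws.add_message("support", "alice", "My export failed.")
    for m in ws.messages_by_peer("alice"):
        print(m.content)
```

The point of the sketch is the peer-level view: because a peer is scoped to the workspace rather than to a single session, data about that peer can be gathered across sessions, which is the kind of input a derived representation or summary would need.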