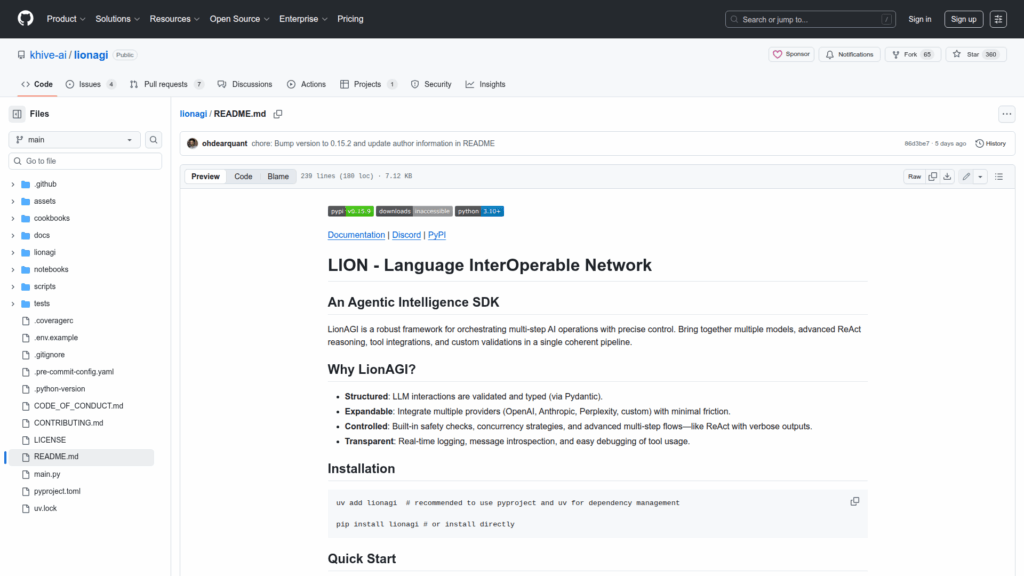

lionagi

Basic Information

LionAGI is an agentic intelligence SDK and orchestration framework for building and running multi-step AI workflows that combine multiple models, tools, and validations into a single pipeline. Its core primitives are Branch, which manages conversation context, and iModel, which manages model instances. It supports ReAct-style multi-step reasoning and tool invocation, and it enforces structured, typed outputs via Pydantic. The project supports multiple providers (OpenAI, Anthropic, Perplexity, and custom providers), Claude Code integration for persistent coding sessions, session auto-resume, concurrency strategies, streaming, and real-time observability. Optional extensions add reader tools for unstructured data, local inference via Ollama, database persistence for structured outputs, graph visualization, and rich console formatting. The README includes quick start examples, multi-model orchestration patterns, and cookbook-style recipes for parallelized fan-out/fan-in orchestration and structured result handling.
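The fan-out/fan-in pattern with typed results mentioned above can be illustrated with a minimal, standard-library-only sketch. It does not use LionAGI's API: `fake_model_call`, `Finding`, `fan_out_fan_in`, and the model names are hypothetical stand-ins, and a dataclass with a range check takes the place of a Pydantic model. In LionAGI itself, the description suggests these roles would be filled by Branch and iModel.

```python
import asyncio
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Typed result record (a stand-in for a Pydantic model)."""
    source: str
    summary: str
    confidence: float

    def __post_init__(self):
        # Reject out-of-range values so malformed results fail early.
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


async def fake_model_call(name: str, prompt: str) -> dict:
    """Hypothetical placeholder for a real provider call."""
    await asyncio.sleep(0)  # yield control, as a network call would
    return {
        "source": name,
        "summary": f"{name} answer to: {prompt}",
        "confidence": 0.5,
    }


async def fan_out_fan_in(prompt: str, model_names: list, max_concurrency: int = 2) -> list:
    """Fan out one prompt to several models, then gather typed results."""
    limiter = asyncio.Semaphore(max_concurrency)  # cap concurrent calls

    async def worker(name: str) -> Finding:
        async with limiter:
            raw = await fake_model_call(name, prompt)
        return Finding(**raw)  # validate the raw dict into a typed record

    # gather returns results in the same order as the inputs (fan-in).
    return await asyncio.gather(*(worker(n) for n in model_names))


def run_demo() -> list:
    return asyncio.run(
        fan_out_fan_in("What is ReAct?", ["model_a", "model_b", "model_c"])
    )
```

Calling `run_demo()` returns one `Finding` per model name, in input order. The semaphore is one simple concurrency strategy; a real orchestration would also need error handling and retries per call.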