llmchat

Basic Information

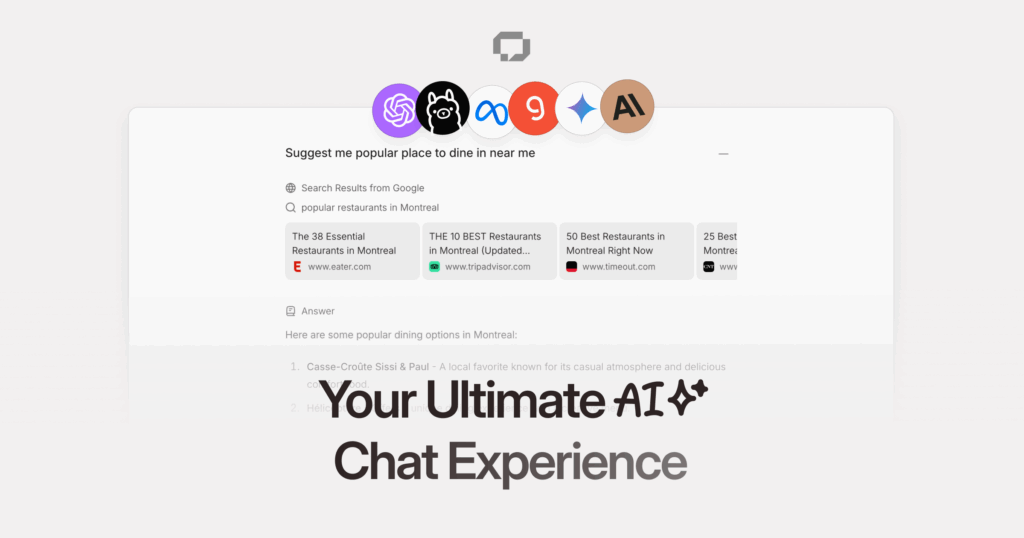

LLMChat is a privacy-focused platform, organized as a monorepo, for building and running AI chat experiences and agentic research workflows. It provides a web and desktop application scaffold built with Next.js and TypeScript, plus shared packages for AI integrations, orchestration, UI, and utilities. Developers can compose multi-step agent workflows (planning, information gathering, analysis, report generation) that coordinate LLM calls, emit typed events, and maintain a shared context. User data is stored local-first in the browser via IndexedDB (Dexie.js), so chat history does not leave the device. LLMChat supports multiple LLM providers and exposes an AI SDK and a workflow builder for assembling reusable tasks and running research agents, from development through local deployment.
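
To make the workflow idea concrete, here is a minimal TypeScript sketch of a multi-step pipeline that emits typed events and threads a shared context through each step. It does not use LLMChat's actual API: every name in it (`WorkflowEvent`, `ResearchContext`, `Step`, `runWorkflow`, `fakeLLM`) is hypothetical, and the LLM call is replaced by a synchronous stub so the example runs on its own.

```typescript
// Hypothetical sketch: none of these names come from the LLMChat codebase.

// Typed events emitted as the workflow progresses (a discriminated union).
type WorkflowEvent =
  | { type: "step-started"; step: string }
  | { type: "step-finished"; step: string; output: string };

// Shared context that every step can read; the runner appends each output.
interface ResearchContext {
  question: string;
  notes: string[];
}

// A step receives the shared context and returns its output text.
interface Step {
  name: string;
  run: (ctx: ResearchContext) => string;
}

// Stand-in for a real provider call (assumption: synchronous and deterministic).
function fakeLLM(prompt: string): string {
  return `[model output for: ${prompt}]`;
}

// Runs the steps in order, emitting an event before and after each one.
function runWorkflow(
  steps: Step[],
  ctx: ResearchContext,
  emit: (event: WorkflowEvent) => void
): ResearchContext {
  for (const step of steps) {
    emit({ type: "step-started", step: step.name });
    const output = step.run(ctx);
    ctx.notes.push(output); // later steps can see earlier results
    emit({ type: "step-finished", step: step.name, output });
  }
  return ctx;
}

// The four stages named in the description, each backed by the stub.
const steps: Step[] = [
  { name: "planning", run: (ctx) => fakeLLM(`plan research for "${ctx.question}"`) },
  { name: "information-gathering", run: (ctx) => fakeLLM(`gather sources from ${ctx.notes.length} note(s)`) },
  { name: "analysis", run: (ctx) => fakeLLM(`analyze ${ctx.notes.length} note(s)`) },
  { name: "report-generation", run: (ctx) => fakeLLM(`write report from ${ctx.notes.length} note(s)`) },
];

const events: WorkflowEvent[] = [];
const result = runWorkflow(
  steps,
  { question: "What is local-first storage?", notes: [] },
  (event) => events.push(event)
);
```

In this sketch a consumer such as a UI could subscribe through the `emit` callback and narrow on `event.type` to render progress, while `result.notes` accumulates one entry per step. A real implementation would presumably be asynchronous and stream provider output, which this stub leaves out.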