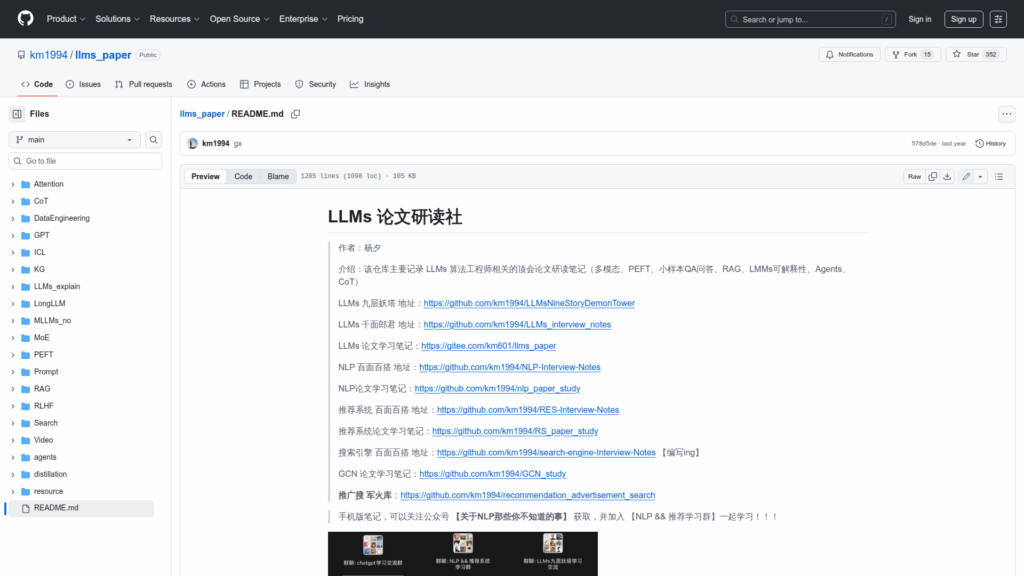

llms_paper

Basic Information

This repository, titled LLMs 论文研读社 (LLMs Paper Reading Club) and maintained by Yang Xi (km1994), is a curated, topic-organized collection of reading notes, summaries, and pointers to research papers and resources on large language models. It covers areas relevant to LLM algorithm engineers, including multimodal models, PEFT (parameter-efficient fine-tuning), few-shot and document QA, retrieval-augmented generation (RAG), LMM interpretability, agents, and chain-of-thought methods. The README aggregates paper metadata (titles, links, institutions), concise method descriptions, experimental highlights, and related GitHub projects, and groups them into thematic sections and subpages (for example GPT4Video, the PEFT series, RAG tricks, and application domains). The content is primarily in Chinese, with links to the original papers and code when available. The repository serves as a living bibliography and study guide for keeping up with recent LLM literature.