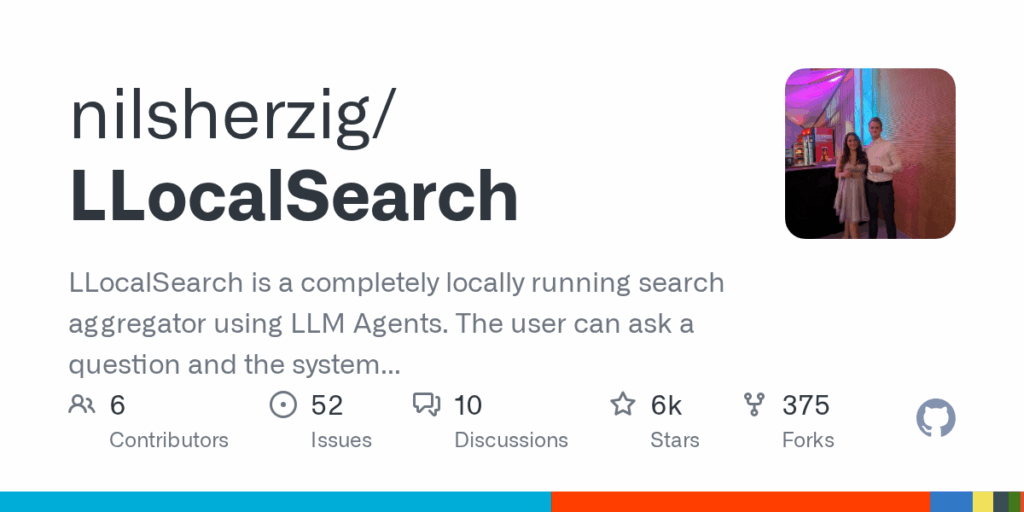

LLocalSearch

Basic Information

LLocalSearch is a local search agent application that wraps locally running large language models so they can choose and call external tools to find current internet information. The system lets an LLM recursively use tools to follow up on results, combine findings and return answers with live logs and source links. It is presented as a privacy-respecting alternative to cloud-hosted search assistants and is designed to run on modest consumer hardware. The README notes the project has not been actively developed for over a year and that the author is working on a private beta rewrite. The repository includes a Docker-based install path and guidance for connecting to a local model runtime called Ollama.
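The recursive tool loop described above can be pictured with the following minimal Python sketch. It is not LLocalSearch's implementation. The `Action` format, the `run_agent` function, and the scripted `fake_llm` and `fake_search` stubs are hypothetical stand-ins for a real model and a real web-search tool, chosen so the example runs offline. It shows the model repeatedly choosing a tool, reading the result, and following up until it returns an answer, while a log and a list of source links build up along the way.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Action:
    """Hypothetical model output: call a tool, or finish with an answer."""
    tool: Optional[str]  # tool name, or None when the model is done
    argument: str        # tool input, or the final answer text


@dataclass
class Trace:
    log: List[str] = field(default_factory=list)      # "live log" lines
    sources: List[str] = field(default_factory=list)  # links gathered from tools


Tool = Callable[[str], Tuple[str, List[str]]]  # returns (text, links)


def run_agent(question: str,
              decide: Callable[[str, List[str]], Action],
              tools: Dict[str, Tool],
              max_steps: int = 5) -> Tuple[str, Trace]:
    """Let `decide` (a stand-in for the LLM) call tools until it answers."""
    trace = Trace()
    observations: List[str] = []
    for step in range(max_steps):
        action = decide(question, observations)
        if action.tool is None:
            trace.log.append(f"step {step}: final answer")
            return action.argument, trace
        text, links = tools[action.tool](action.argument)
        trace.log.append(f"step {step}: {action.tool}({action.argument!r})")
        trace.sources.extend(links)
        observations.append(text)  # fed back so the model can follow up
    return "No answer within the step limit.", trace


def fake_search(query: str) -> Tuple[str, List[str]]:
    # Offline stub in place of a real web-search tool.
    return f"results for {query}", [f"https://example.com/?q={query.replace(' ', '+')}"]


def fake_llm(question: str, observations: List[str]) -> Action:
    # Scripted stand-in for the model: search, follow up once, then answer.
    if not observations:
        return Action("search", question)
    if len(observations) == 1:
        return Action("search", question + " latest")
    return Action(None, "Answer based on: " + "; ".join(observations))


answer, trace = run_agent("ollama release", fake_llm, {"search": fake_search})
print(answer)
print(trace.sources)
```

Capping the loop with `max_steps` is a design choice of this sketch. It stops a model that never settles on an answer from calling tools indefinitely.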

Links

Stars

5947

GitHub Repository

App Details

Features

The project exposes an agent chain that lets a locally hosted LLM use tools and call them recursively. Documented features include fully local operation with no API keys, live logs and clickable links in answers so users can inspect sources, support for follow-up questions, a mobile-friendly responsive interface, and dark and light modes. The codebase uses a LangChain integration, and the author is working on fixes for Llama 3 stop-word handling. The roadmap lists interface improvements, chat histories, groundwork for user accounts, and long-term memory via per-user vector namespaces. The installation instructions center on Docker Compose and a connection to Ollama.
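As a rough illustration of the Ollama connection, the sketch below builds a request for Ollama's HTTP `/api/generate` endpoint on its default port 11434, with `stream` disabled and an optional list of stop sequences passed through `options`. The helper names, the `llama3` model tag, and the `Observation:` stop sequence are assumptions for illustration only; this does not show how LLocalSearch itself configures its LangChain integration or its stop words.

```python
import json
import urllib.request

# Ollama listens on port 11434 by default; adjust if it runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model, prompt, stop=None, url=OLLAMA_URL):
    """Build (but do not send) a non-streaming /api/generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if stop:
        # Stop sequences cut generation off as soon as one is produced,
        # e.g. before the model invents a tool result on its own.
        payload["options"] = {"stop": list(stop)}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(request, timeout=120):
    """Send the request to a running Ollama server and return its text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        # With "stream": false, Ollama replies with one JSON object whose
        # "response" field holds the generated text.
        return json.loads(resp.read())["response"]


# Hypothetical model tag and stop sequence; building the request needs no network.
req = build_generate_request("llama3", "Question: ...", stop=["Observation:"])
print(json.loads(req.data))
```

Actually calling `generate(req)` requires a local Ollama server with the named model already pulled. Keeping request construction separate from sending makes the payload easy to inspect offline.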

Use Cases

LLocalSearch lets users run a search-capable conversational agent on their own hardware, protecting their privacy and avoiding the ranking and monetization biases of third-party services. Because the LLM calls internet-search tools and shows live logs and links, its answers are more transparent and offer a practical starting point for deeper research. Recursive tool calls and follow-up questions let the agent refine its queries and gather up-to-date information without external API keys. Docker-based deployment and low hardware requirements aim to make it accessible to hobbyists and researchers. Planned features such as chat histories, user accounts, and persistent memory would enable personalized, repeatable research workflows.