Local-LLM-Langchain

Basic Information

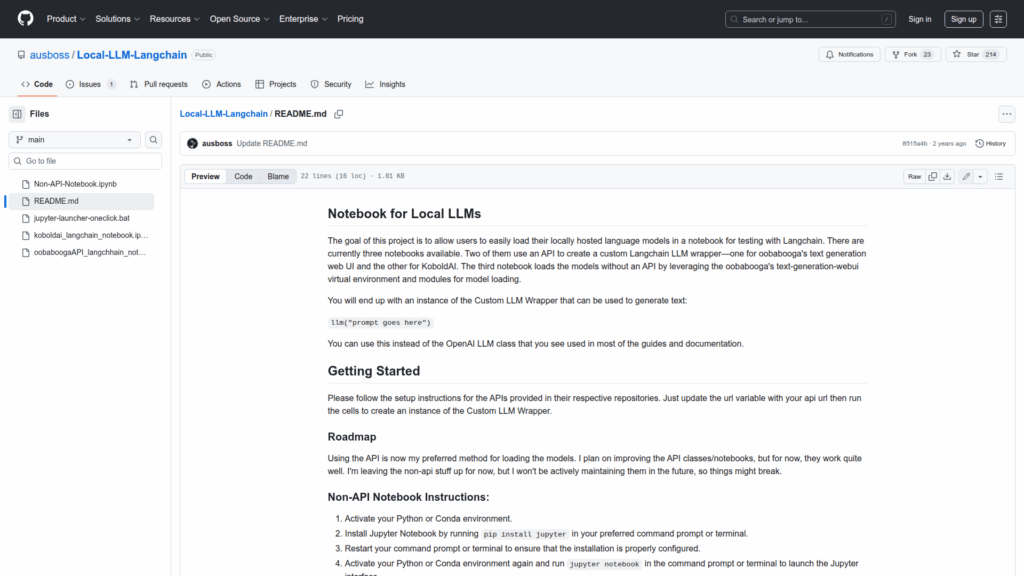

This repository provides Jupyter notebooks and example code to help users load locally hosted language models for testing and experimentation with Langchain. It includes three notebooks: two that create a custom Langchain LLM wrapper by communicating with a model's API, and one that loads models directly, without an API, by using the virtual environment and modules of oobabooga's text-generation-webui. The notebooks target oobabooga's text-generation-webui and KoboldAI as example backends. The end result is an instance of a Custom LLM Wrapper that can be called from notebook code (for example, `llm('prompt goes here')`) and used in place of the OpenAI LLM class commonly seen in Langchain guides.
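As a rough illustration of what such a wrapper does, the sketch below posts a prompt to a local HTTP backend and returns the generated text. It is plain standard-library Python, not the notebooks' actual code: the class name `LocalLLM`, the helper `http_post_json`, the `/api/v1/generate` path, the `max_new_tokens` field, and the `results[0]["text"]` response shape are all assumptions that should be checked against the backend's own API documentation. In a real Langchain wrapper, the same request logic would typically live in the `_call` method of a subclass of Langchain's LLM base class.

```python
import json
import urllib.request
from typing import Callable, List, Optional


def http_post_json(url: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST a JSON payload and decode the JSON response (stdlib only)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


class LocalLLM:
    """Hypothetical callable wrapper around a locally hosted model API."""

    def __init__(
        self,
        api_url: str,
        max_new_tokens: int = 200,
        post: Callable[[str, dict], dict] = http_post_json,
    ) -> None:
        self.api_url = api_url.rstrip("/")
        self.max_new_tokens = max_new_tokens
        self.post = post  # injectable so the class can be tested offline

    def __call__(self, prompt: str, stop: Optional[List[str]] = None) -> str:
        # Assumed request and response shapes; adjust to the real backend.
        payload = {"prompt": prompt, "max_new_tokens": self.max_new_tokens}
        data = self.post(self.api_url + "/api/v1/generate", payload)
        text = data["results"][0]["text"]
        # Cut the completion at the earliest stop sequence, if any is present.
        for sequence in stop or []:
            index = text.find(sequence)
            if index != -1:
                text = text[:index]
        return text
```

With a backend listening locally, usage would look like `llm = LocalLLM("http://localhost:5000")` followed by `llm("prompt goes here")`; the host and port here are also assumptions.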

Stars

213

App Details

Features

- Three example Jupyter notebooks demonstrating API-based and non-API integration paths.
- API wrappers for oobabooga's text-generation-webui and KoboldAI that produce a Custom LLM Wrapper compatible with Langchain usage.
- A non-API notebook that uses the text-generation-webui virtual environment and Python modules to load models locally.
- A simple usage pattern: update an API URL variable, then run the cells to create an `llm` instance.
- Basic setup and Jupyter usage instructions.
- A roadmap note indicating that API-based usage is preferred and will receive improvements, while the non-API examples may not be actively maintained.

Use Cases

The project lets developers and researchers experiment with locally hosted language models inside a familiar Jupyter notebook workflow and integrate those models into Langchain pipelines. Replacing cloud LLM calls with a local Custom LLM Wrapper means existing Langchain examples and agent code can be tested offline or with private models. The API notebooks connect quickly by changing a URL variable, while the non-API notebook offers a path to load models directly in environments that already run text-generation-webui. The included Jupyter setup steps reduce friction for getting started, and the README clarifies that API-based integrations are the recommended path going forward.
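The drop-in idea can be sketched without any particular backend: code that only relies on "a callable that maps a prompt string to a completion string" does not care whether that callable is a cloud client or a local wrapper. The names below (`LLMCallable`, `summarize`, `canned_llm`) are hypothetical and exist only for illustration; `canned_llm` stands in for a real local model so the example runs offline.

```python
from typing import Callable

# Any callable that maps a prompt string to a completion string.
LLMCallable = Callable[[str], str]


def summarize(llm: LLMCallable, text: str) -> str:
    """Build a prompt from a fixed template and return the trimmed completion."""
    prompt = (
        "Summarize the following text in one sentence:\n\n"
        f"{text}\n\nSummary:"
    )
    return llm(prompt).strip()


def canned_llm(prompt: str) -> str:
    """Hypothetical stand-in for a local model; always returns the same text."""
    return "  A canned one-sentence summary.  "


if __name__ == "__main__":
    print(summarize(canned_llm, "Langchain pipelines can call local models."))
```

Swapping `canned_llm` for a wrapper instance that talks to a local backend would leave `summarize` unchanged, which is the property that makes existing pipeline code reusable.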