microchain

Basic Information

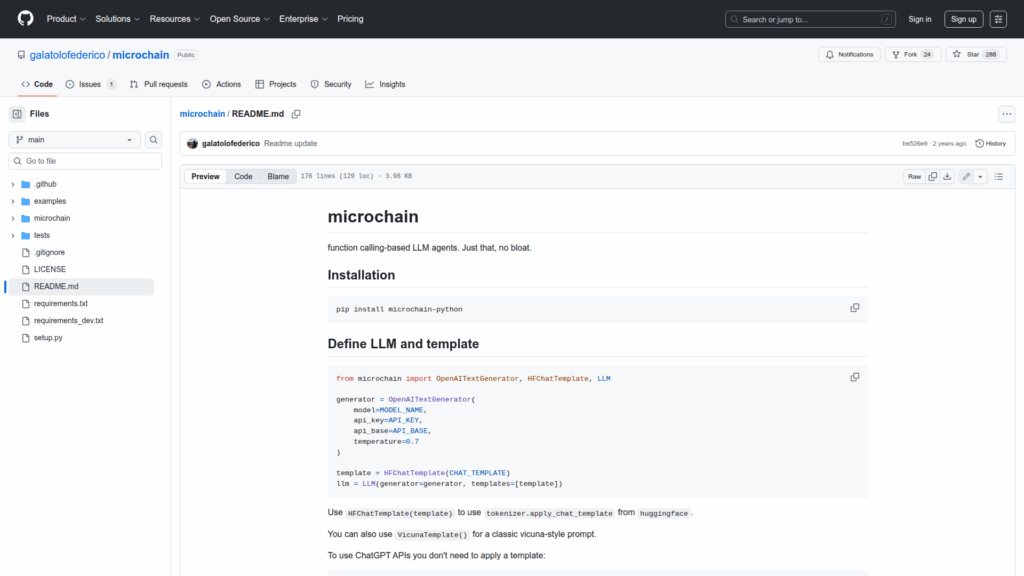

microchain is a lightweight Python library for building function-calling LLM agents with minimal overhead. It connects language-model generators to a small execution engine, so developers can define callable functions as plain Python objects and expose them to an LLM. The README shows how to install the package with pip, instantiate generators for the OpenAI chat and text APIs, apply Hugging Face or Vicuna-style chat templates, and wrap a generator in an LLM object.

The library emphasizes explicit function definitions with type annotations, automatically generated help text, and a simple Agent/Engine orchestration model in which registered functions, prompt templates, and bootstrapped calls drive the agent's behavior. The project includes examples that demonstrate step-by-step reasoning and deterministic function execution.
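The orchestration described above (type-annotated function definitions, generated help text, an engine that executes registered functions, and an agent that runs bootstrapped calls before querying the model) can be sketched in plain Python. This is a minimal, self-contained sketch under stated assumptions, not microchain's implementation: the names `Function`, `Engine`, `Agent`, `Sum`, `Stop`, `help()`, `execute()`, `run()`, and `scripted_llm` are hypothetical stand-ins, the `Name(args)` call syntax is assumed, and a canned-reply function replaces a real OpenAI or Hugging Face generator so the example runs offline. Consult the project README for the library's actual class names and signatures.

```python
# Hypothetical sketch of the function-calling pattern; NOT microchain's actual API.
import ast
import inspect
from typing import Any, Callable, Dict, List

class Function:
    # Hypothetical base class: subclasses set description/example_args and define __call__.
    description: str = ""
    example_args: List[Any] = []

    @property
    def name(self) -> str:
        return type(self).__name__

    def help(self) -> str:
        # Help text is generated from the type annotations on __call__ (self is excluded).
        sig = inspect.signature(self.__call__)
        params = ", ".join(f"{p.name}: {p.annotation.__name__}" for p in sig.parameters.values())
        example = ", ".join(repr(a) for a in self.example_args)
        return f"{self.name}({params})\n  {self.description}\n  Example: {self.name}({example})"

class Sum(Function):
    description = "Use this function to add two numbers"
    example_args = [2, 2]

    def __call__(self, a: float, b: float) -> float:
        return a + b

class Stop(Function):
    description = "Use this function to end the run"

    def __call__(self) -> str:
        return "Stopping"

class Engine:
    # Hypothetical registry that executes call strings such as 'Sum(1, 2)'.
    def __init__(self) -> None:
        self.functions: Dict[str, Function] = {}

    def register(self, fn: Function) -> None:
        self.functions[fn.name] = fn

    def help(self) -> str:
        return "\n".join(fn.help() for fn in self.functions.values())

    def execute(self, call: str) -> str:
        # Parse with ast rather than eval so only literal arguments are accepted.
        try:
            node = ast.parse(call.strip(), mode="eval").body
        except SyntaxError:
            return "Error: not a valid function call"
        if not (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)):
            return "Error: expected a single function call"
        fn = self.functions.get(node.func.id)
        if fn is None:
            return f"Error: unknown function {node.func.id}"
        try:
            args = [ast.literal_eval(a) for a in node.args]
            kwargs = {k.arg: ast.literal_eval(k.value) for k in node.keywords}
            return str(fn(*args, **kwargs))
        except (ValueError, TypeError) as exc:
            return f"Error: {exc}"

class Agent:
    # Hypothetical loop: run bootstrapped calls, then ask the model for one call per step.
    def __init__(self, llm: Callable[[str], str], engine: Engine,
                 prompt: str, bootstrap: List[str]) -> None:
        self.llm, self.engine, self.bootstrap = llm, engine, bootstrap
        self.history: List[str] = [prompt, engine.help()]

    def step(self, call: str) -> None:
        self.history += [call, self.engine.execute(call)]

    def run(self, max_steps: int = 10) -> List[str]:
        for call in self.bootstrap:
            self.step(call)
        for _ in range(max_steps):
            call = self.llm("\n".join(self.history)).strip()
            self.step(call)
            if call.startswith("Stop("):
                break
        return self.history

def scripted_llm(replies: List[str]) -> Callable[[str], str]:
    # Stand-in for a real generator/LLM: ignores the prompt and replays canned replies.
    it = iter(replies)
    return lambda _prompt: next(it)

engine = Engine()
engine.register(Sum())
engine.register(Stop())
agent = Agent(llm=scripted_llm(["Sum(a=3, b=4)", "Stop()"]), engine=engine,
              prompt="Add 1 and 2, then 3 and 4, then stop.", bootstrap=["Sum(1, 2)"])
history = agent.run()
```

Parsing each model reply with `ast` and `ast.literal_eval` keeps execution deterministic in this sketch: the engine only dispatches a call whose name is registered and whose arguments are literals, and anything else comes back as an error string the model can read on its next turn. Bootstrapped calls run before the first model turn, so the transcript already contains a worked example of the call format.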