multimodal-agents-course

Basic Information

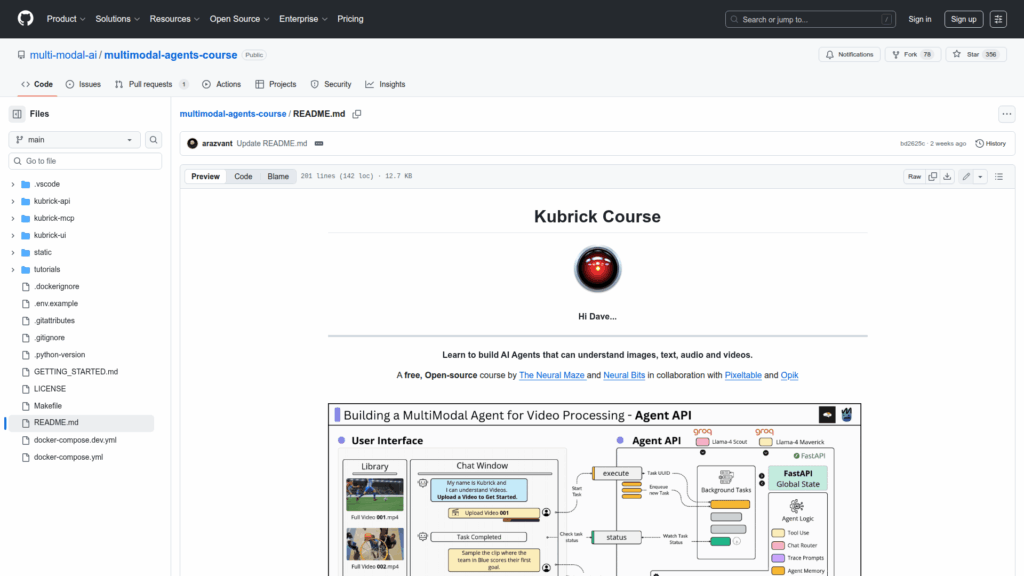

This repository hosts the Kubrick Course, an open-source, hands-on course that teaches developers how to build production-ready multimodal AI agents capable of understanding video, images, audio, and text. The course centers on building an MCP (Model Context Protocol) server for video processing, designing custom agent clients, and integrating multimodal pipelines and observability tools. It is aimed at engineers who want to move beyond simple tutorials and learn how to architect, implement, and operate agentic systems that combine vision-language models (VLMs), large language models (LLMs), prompt versioning, and streaming APIs. The materials include modular lessons, code examples, and a guided path for running a Kubrick agent that demonstrates video search, tool use, and end-to-end API integrations.