NanoLLM

Basic Information

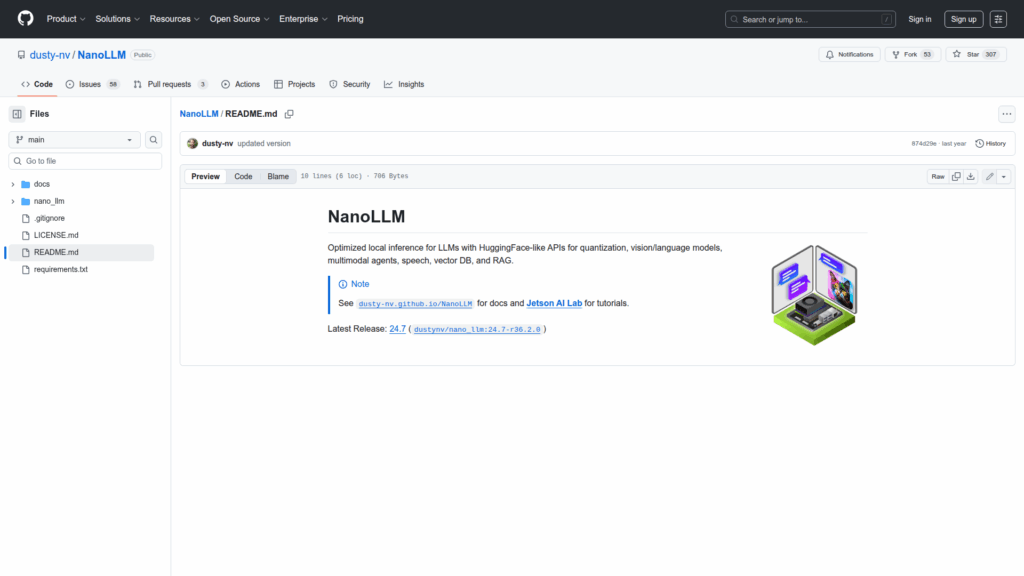

NanoLLM is a developer-focused project that provides optimized local inference for large language models and related multimodal systems. Its HuggingFace-like APIs keep model loading and serving familiar to developers, while the project itself concentrates on efficient local execution and quantization workflows. The repository targets use cases that combine language, vision, and speech, exposing interfaces for multimodal agents, vector database integration, and retrieval-augmented generation. Documentation and tutorials are available through a dedicated docs site and a Jetson AI Lab tutorial resource. Releases ship with packaged images, including a Docker tag, to simplify deployment. The project is intended for practitioners who need to run inference locally with support for quantized models, multimodal inputs, speech components, and retrieval pipelines.