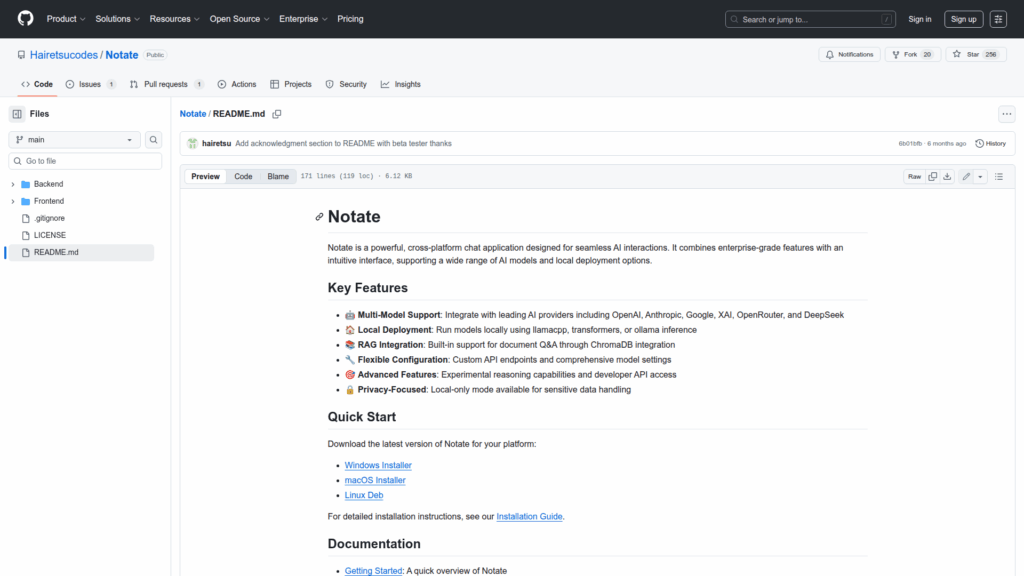

Notate

Basic Information

Notate is a cross-platform desktop chat application that gives end users and developers a single interface for AI interactions. It centralizes access to multiple AI providers and local inference backends, supporting conversations with models from OpenAI, Anthropic, Google, xAI, OpenRouter, and DeepSeek, as well as locally hosted models via llamacpp, transformers, or ollama. The app supports document question answering through retrieval-augmented generation (RAG) backed by ChromaDB, and includes tools for ingesting files or URLs into FileCollections. It ships as installers for Windows, macOS, and Linux, and offers developer API access, configurable model endpoints and settings, experimental reasoning features, and a local-only mode for privacy-sensitive use. The repository contains the Electron frontend project structure, build and distribution scripts, development run commands, system requirements, and user-facing documentation with screenshots.