open-assistant-api

Basic Information

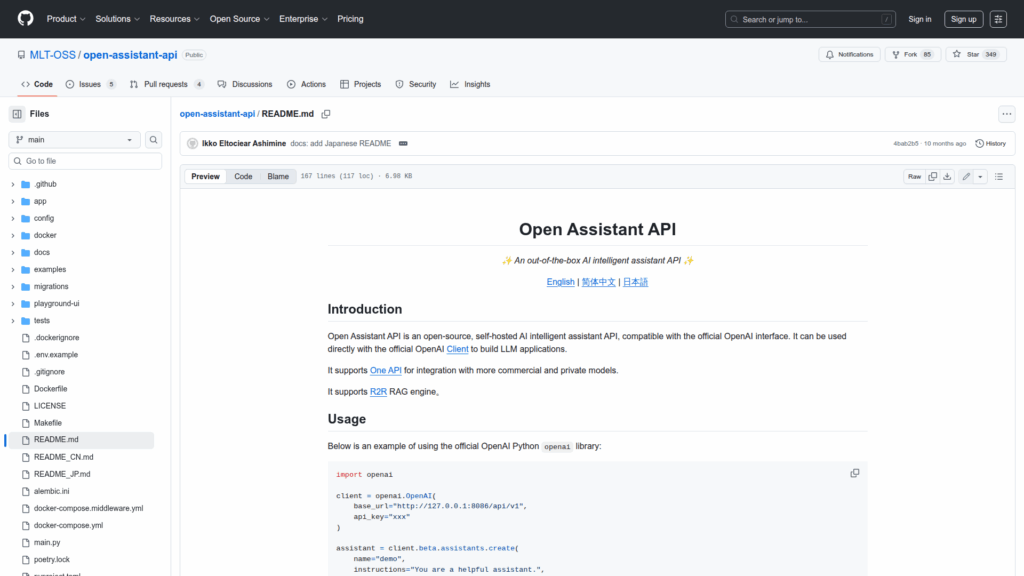

Open Assistant API is an open-source, self-hosted API for running AI assistants and building LLM applications. It implements an assistant API compatible with the official OpenAI interface, so developers can create, configure, and run assistants with the OpenAI Python client and other OpenAI-compatible tools. The project is aimed at teams and developers who want a locally deployable assistant backend. It supports multiple LLMs through One API, integrates retrieval-augmented generation (RAG) engines such as R2R, and exposes REST/OpenAPI endpoints. The README emphasizes easy startup with Docker Compose and configurable environment variables for API keys and RAG endpoints, and it includes an examples directory demonstrating assistant creation, streaming, tools, and retrieval usage.
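Because the API is OpenAI-compatible, pointing the OpenAI Python client at a self-hosted deployment should mostly be a matter of overriding the base URL. The following is a minimal sketch, not the project's own example: the environment variable names `ASSISTANT_API_BASE_URL` and `ASSISTANT_API_KEY`, the default address, and the helper functions are all hypothetical, so substitute whatever your deployment actually exposes.

```python
import os


def client_settings(env=None):
    """Resolve connection settings for a self-hosted, OpenAI-compatible server.

    The variable names and default values below are assumptions made for
    illustration; they are not taken from the project's documentation.
    """
    env = os.environ if env is None else env
    return {
        # Placeholder address: use the host, port, and path of your deployment.
        "base_url": env.get("ASSISTANT_API_BASE_URL", "http://localhost:8086/api/v1"),
        # Placeholder key: use whatever credential your deployment expects.
        "api_key": env.get("ASSISTANT_API_KEY", "placeholder-key"),
    }


def create_assistant(name, instructions, model):
    """Create an assistant through the standard OpenAI Python client."""
    # Imported lazily so the settings helper works without the package installed.
    from openai import OpenAI

    client = OpenAI(**client_settings())
    return client.beta.assistants.create(
        name=name,
        instructions=instructions,
        model=model,  # must be a model name your backend is configured to serve
    )
```

With the server running, a call such as `create_assistant("demo", "You are a helpful assistant.", model=...)` would return an assistant object whose `id` can be used in later thread and run requests. Keeping the connection settings in one helper makes it easy to switch between the hosted OpenAI service and a local deployment without touching the rest of the code.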