palico-ai

Basic Information

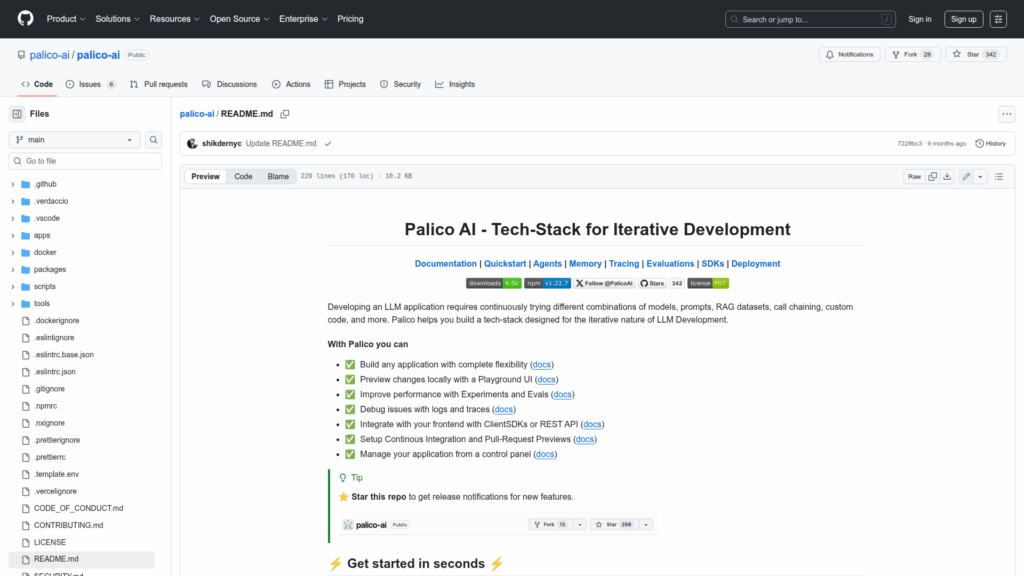

Palico AI is an opinionated tech stack and developer framework for building, iterating on, evaluating, and deploying LLM-powered applications. Its README presents it as a toolkit built around the iterative nature of LLM development, bringing together agents, memory management, telemetry and tracing, experiments and evaluations, client SDKs, and deployment utilities in one place. It provides primitives for implementing application logic (for example, a Chat handler), a Playground UI for previewing applications locally, and first-party React support.

The project lets developers choose their own models, prompts, retrieval datasets, and custom code, and supports systematic improvement cycles built on test cases, metrics, and experiment tooling. The repository includes documentation, quickstart commands, and examples for initializing projects and integrating with existing services and deployment pipelines. It targets developers building production LLM applications who need tooling for development, testing, observability, and rollout.
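The improvement cycle described above (implement a handler, run it over test cases, score the responses with a metric, and compare configurations) can be illustrated with a small, framework-agnostic TypeScript sketch. Every name here (`ChatHandler`, `runExperiment`, `containsExpected`, `echoHandler`, and the `systemPrompt` config field) is hypothetical and is not Palico's actual API; the stub handler stands in for a real model call so the loop itself stays visible.

```typescript
// Hypothetical types for illustration only; NOT Palico's API.
interface AppConfig {
  systemPrompt: string;
}

interface ChatRequest {
  userMessage: string;
  appConfig: AppConfig;
}

interface ChatResponse {
  message: string;
}

type ChatHandler = (req: ChatRequest) => ChatResponse;

interface TestCase {
  input: string;
  expectedSubstring: string;
}

// A metric maps one response (plus its test case) to a score.
type Metric = (response: ChatResponse, testCase: TestCase) => number;

interface ExperimentResult {
  configName: string;
  meanScore: number;
  scores: number[];
}

// Stub handler: a real application would call an LLM here. It simply
// prepends the configured system prompt to the user's message.
const echoHandler: ChatHandler = ({ userMessage, appConfig }) => ({
  message: `${appConfig.systemPrompt} ${userMessage}`.trim(),
});

// Scores 1 if the response contains the expected text (case-insensitive), else 0.
const containsExpected: Metric = (response, testCase) =>
  response.message.toLowerCase().includes(testCase.expectedSubstring.toLowerCase())
    ? 1
    : 0;

// Runs every test case through the handler under one config and averages the metric.
function runExperiment(
  configName: string,
  appConfig: AppConfig,
  handler: ChatHandler,
  testCases: TestCase[],
  metric: Metric,
): ExperimentResult {
  const scores = testCases.map((tc) =>
    metric(handler({ userMessage: tc.input, appConfig }), tc),
  );
  const meanScore =
    scores.length === 0 ? 0 : scores.reduce((a, b) => a + b, 0) / scores.length;
  return { configName, meanScore, scores };
}

// Usage: compare two configurations against the same test cases.
const testCases: TestCase[] = [
  { input: "What is 2 + 2?", expectedSubstring: "4" },
  { input: "Say hello", expectedSubstring: "hello" },
];

for (const [name, config] of [
  ["baseline", { systemPrompt: "" }],
  ["with-hint", { systemPrompt: "The answer is 4." }],
] as const) {
  const result = runExperiment(name, config, echoHandler, testCases, containsExpected);
  console.log(`${result.configName}: ${result.meanScore}`);
}
// prints "baseline: 0.5" then "with-hint: 1"
```

Keeping the handler, test cases, and metric as separate values is what makes the comparison systematic: changing only the configuration and re-running the same suite isolates the effect of that change on the score.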