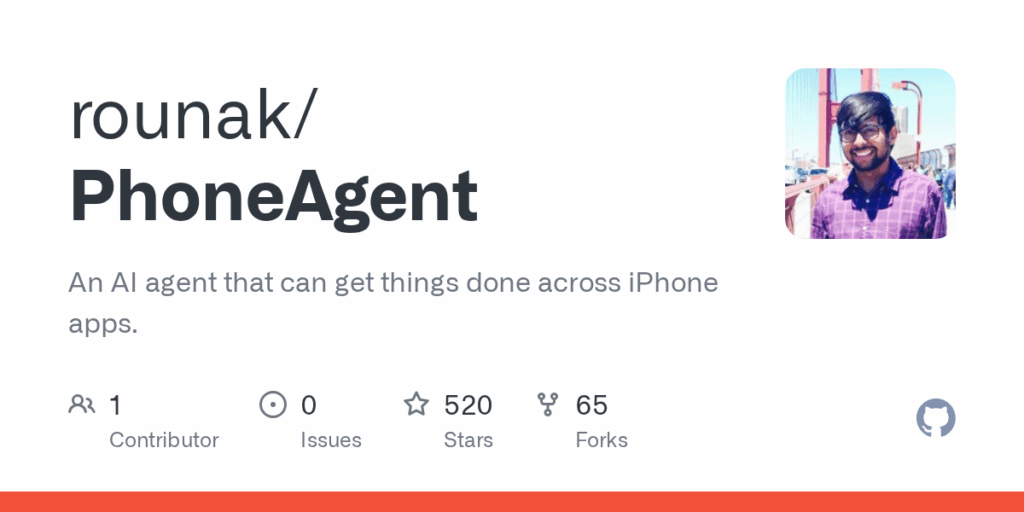

Features

The project lets a language model operate the iPhone UI by reading an app's accessibility tree and performing actions such as tapping specific elements, swiping and scrolling, typing into text fields, and launching other apps. Built-in tool actions include getting the current app contents, tapping a UI element, typing in a text field, and opening an app. The agent supports follow-up interactions via completion notifications and accepts voice input via a microphone button. An optional Always On mode listens for a wake word ("Agent" by default), even when the app is backgrounded. The prototype stores the OpenAI API key in the device keychain. Under the hood, it uses Xcode UI tests and a TCP server for orchestration, and it is powered by gpt-4.1. The README notes potential extensions such as capturing screen images via XCTest APIs.
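To make the four built-in tool actions more concrete, here is a minimal, language-neutral sketch in Python of how a model's tool calls might be routed to device actions. It is not the project's code: the real prototype drives the phone through Xcode UI tests, whereas this uses an in-memory stand-in. Every name here (`Element`, `FakeDevice`, `dispatch`, and the tool names `get_contents`, `tap_element`, `type_text`, `open_app`) is a hypothetical chosen for illustration, as is the shape of the tool-call dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """One node in a simplified, hypothetical accessibility tree."""
    identifier: str
    kind: str            # e.g. "button" or "textField"
    label: str = ""
    value: str = ""


@dataclass
class FakeDevice:
    """In-memory stand-in for the phone (assumption: the real project uses XCTest)."""
    current_app: str = "Springboard"
    elements: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def get_contents(self) -> str:
        # Serialize the tree as plain text so it could be placed in a model prompt.
        lines = [f"app: {self.current_app}"]
        for e in self.elements.values():
            lines.append(f'{e.kind} id={e.identifier} label="{e.label}" value="{e.value}"')
        return "\n".join(lines)

    def tap(self, identifier: str) -> str:
        if identifier not in self.elements:
            return f"error: no element {identifier}"
        self.log.append(("tap", identifier))
        return "ok"

    def type_text(self, identifier: str, text: str) -> str:
        e = self.elements.get(identifier)
        if e is None or e.kind != "textField":
            return f"error: {identifier} is not a text field"
        e.value += text
        self.log.append(("type", identifier, text))
        return "ok"

    def open_app(self, bundle_id: str) -> str:
        # Switching apps discards the previous app's elements in this toy model.
        self.current_app = bundle_id
        self.elements = {}
        self.log.append(("open", bundle_id))
        return "ok"


def dispatch(device: FakeDevice, call: dict) -> str:
    """Route one tool call shaped like {"name": ..., "arguments": {...}}."""
    handlers = {
        "get_contents": lambda a: device.get_contents(),
        "tap_element": lambda a: device.tap(a["id"]),
        "type_text": lambda a: device.type_text(a["id"], a["text"]),
        "open_app": lambda a: device.open_app(a["bundle_id"]),
    }
    handler = handlers.get(call["name"])
    if handler is None:
        return f"error: unknown tool {call['name']}"
    return handler(call.get("arguments", {}))
```

In this sketch, each tool returns a short string that would be sent back to the model as the tool result, so failures such as tapping a missing element surface as text the model can react to rather than as exceptions.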

Use Cases

PhoneAgent is useful as a proof-of-concept personal assistant that automates and coordinates tasks across multiple iPhone apps by simulating human interactions. It can execute natural-language or voice commands to perform the concrete tasks shown in the README's examples, such as taking and sending a selfie with a caption, downloading an app from the App Store, composing and sending a message with flight details, calling an Uber, or toggling system controls like the torch in Control Center. For developers and researchers, it demonstrates how to connect OpenAI models to iOS UI tests to enable multi-step workflows, follow-up replies, and background wake-word listening. The README documents limitations, including inaccurate keyboard input, animations that confuse the model, and no waiting for long-running tasks. It also highlights privacy considerations, because app contents are sent to OpenAI. Instructions for running the prototype are provided in the README.