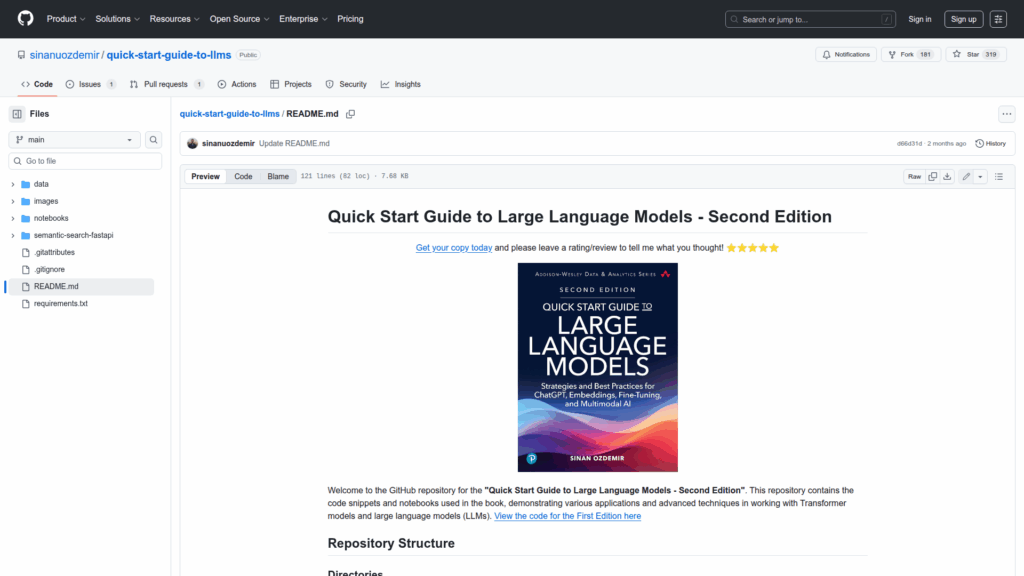

quick-start-guide-to-llms

Basic Information

This repository is the companion codebase for the book Quick Start Guide to Large Language Models - Second Edition. It contains the code snippets, Jupyter notebooks, datasets, and images the book uses to demonstrate practical workflows and advanced techniques for working with Transformer models and large language models (LLMs). The notebooks are organized by book chapter and cover semantic search, prompt engineering, retrieval-augmented generation, agent construction, fine-tuning and reward modeling, embedding customization, recommendation systems, visual question answering, reinforcement learning for language models, distillation and quantization for deployment, and evaluation and probing methods. The repository serves three purposes: hands-on learning, reproducing the examples in the book, and providing a collection of applied recipes and experiments for practitioners and researchers working with LLMs.