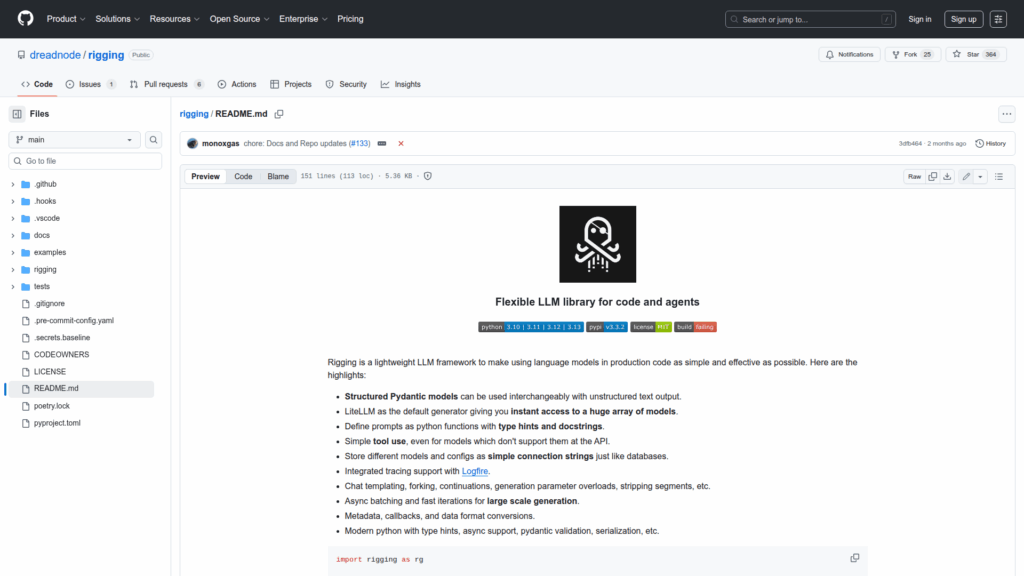

rigging

Basic Information

Rigging is a lightweight Python framework for using large language models in production code. It targets developers who need a structured way to call and manage LLMs, build chat or completion pipelines, and embed typed prompts directly in application code. The library lets structured Pydantic models and unstructured text be used interchangeably. It supports a wide range of model backends through a default LiteLLM generator, as well as vLLM and transformers, and it provides tools for prompt definition, tool invocation, tracing, and scaling.

The README covers installation via pip or building from source, examples for interactive chat, Jupyter code interpretation, RAG pipelines, and agents, and guidance on configuring API keys and generators. The project is maintained by dreadnode and is intended for production use in modern Python applications.
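To make the idea of moving between structured models and unstructured text more concrete, here is a minimal sketch of the round trip. It deliberately does not use Rigging's API: the `Answer` class, its `to_text` and `from_text` methods, and the tag names are all hypothetical, and a standard-library dataclass stands in for a Pydantic model. It only illustrates the general pattern of rendering a typed value as tagged text and parsing it back out of a free-form model reply.

```python
import re
from dataclasses import dataclass


@dataclass
class Answer:
    """Hypothetical structured type for illustration; not part of Rigging."""

    city: str
    population: int

    def to_text(self) -> str:
        # Render the structured value as tagged text that a model could emit.
        return (
            f"<answer><city>{self.city}</city>"
            f"<population>{self.population}</population></answer>"
        )

    @classmethod
    def from_text(cls, text: str) -> "Answer":
        # Find the tagged block anywhere inside otherwise free-form text.
        match = re.search(
            r"<answer>\s*<city>(.*?)</city>\s*"
            r"<population>(\d+)</population>\s*</answer>",
            text,
            re.DOTALL,
        )
        if match is None:
            raise ValueError("no <answer> block found in text")
        return cls(city=match.group(1).strip(), population=int(match.group(2)))


# A made-up model reply that mixes prose with a tagged, structured block.
reply = (
    "Sure! <answer><city>Oslo</city><population>700000</population></answer> "
    "Hope that helps."
)
parsed = Answer.from_text(reply)
```

Here `parsed` is an ordinary typed object that application code can work with, while `parsed.to_text()` produces the tagged form again. A real library would typically add validation and richer error handling on top of this pattern.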