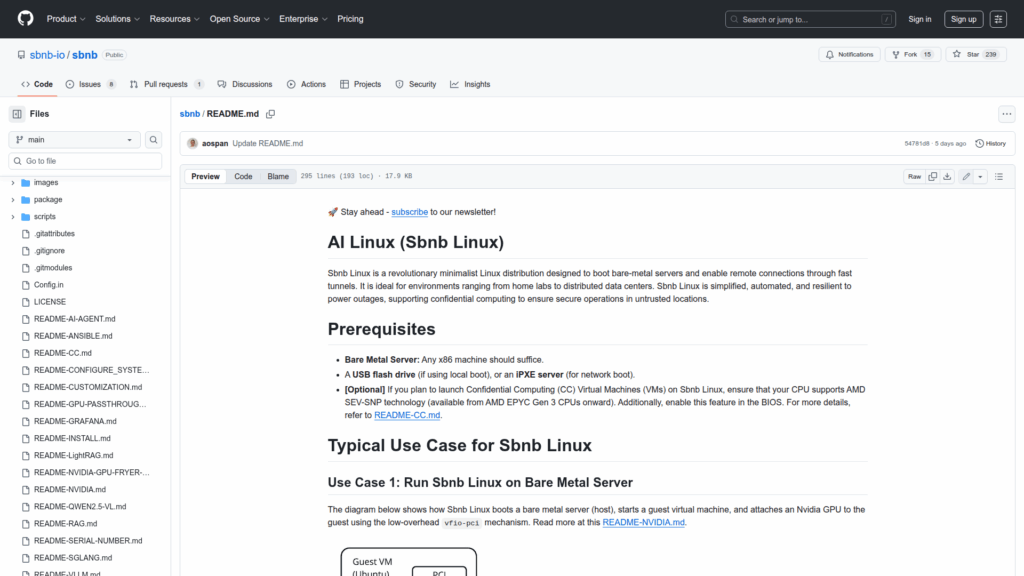

sbnb

Basic Information

Sbnb Linux is a minimalist, immutable Linux distribution designed to boot bare-metal servers and quickly enable remote access and AI workloads. It targets environments ranging from home labs to distributed data centers. The operating system runs entirely in memory, establishes secure tunnels for remote management, and can host GPU-accelerated workloads inside containers or virtual machines.

The project includes tooling and documentation to boot systems from a USB drive or over the network with iPXE, to attach Nvidia GPUs to guests using vfio-pci, and to deploy popular AI software and models such as vLLM, SGLang, RAGFlow, LightRAG, and Qwen2.5-VL. It supports Confidential Computing via AMD SEV-SNP on hardware that provides it, and it emphasizes immutable images, reproducible builds with Buildroot, and automation-friendly customizations for infrastructure-as-code workflows.
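Attaching a GPU to a guest with vfio-pci relies on a standard Linux mechanism. The GPU is detached from its host driver and handed to vfio-pci, so a hypervisor can pass it through to a virtual machine. The Python sketch below shows the generic sysfs steps involved (`driver_override`, `unbind`, `drivers_probe`). It is an illustration under stated assumptions, not Sbnb's own tooling, which may wrap this differently. The function name `bind_to_vfio` and the `sysfs_root` parameter are hypothetical. The sketch also assumes root privileges, an enabled IOMMU, and that the `vfio-pci` kernel module is already loaded.

```python
import re
from pathlib import Path

# PCI address in sysfs form: domain:bus:device.function, lowercase hex.
_PCI_ADDR = re.compile(r"[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]")


def bind_to_vfio(pci_addr: str, sysfs_root: str = "/sys") -> None:
    """Rebind one PCI function to vfio-pci (hypothetical helper; needs root)."""
    if not _PCI_ADDR.fullmatch(pci_addr):
        raise ValueError(f"not a PCI address: {pci_addr!r}")

    pci = Path(sysfs_root) / "bus" / "pci"
    dev = pci / "devices" / pci_addr
    if not dev.exists():
        raise FileNotFoundError(f"no such PCI device: {dev}")

    # 1. Restrict binding so that only the vfio-pci driver may claim this device.
    (dev / "driver_override").write_text("vfio-pci")

    # 2. Detach the device from its current driver (e.g. nvidia or nouveau), if any.
    #    On a real system "driver" is a symlink to the bound driver's directory.
    unbind = dev / "driver" / "unbind"
    if unbind.exists():
        unbind.write_text(pci_addr)

    # 3. Re-probe the device; because of driver_override, vfio-pci claims it.
    (pci / "drivers_probe").write_text(pci_addr)
```

For example, `bind_to_vfio("0000:01:00.0")` would rebind the device at that address. Typically, every function in the GPU's IOMMU group must be bound the same way before the group can be passed to a VM. This often includes the GPU's audio controller at function `.1`. The `sysfs_root` parameter exists so that the helper can be exercised against a fake directory tree instead of the live `/sys`.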