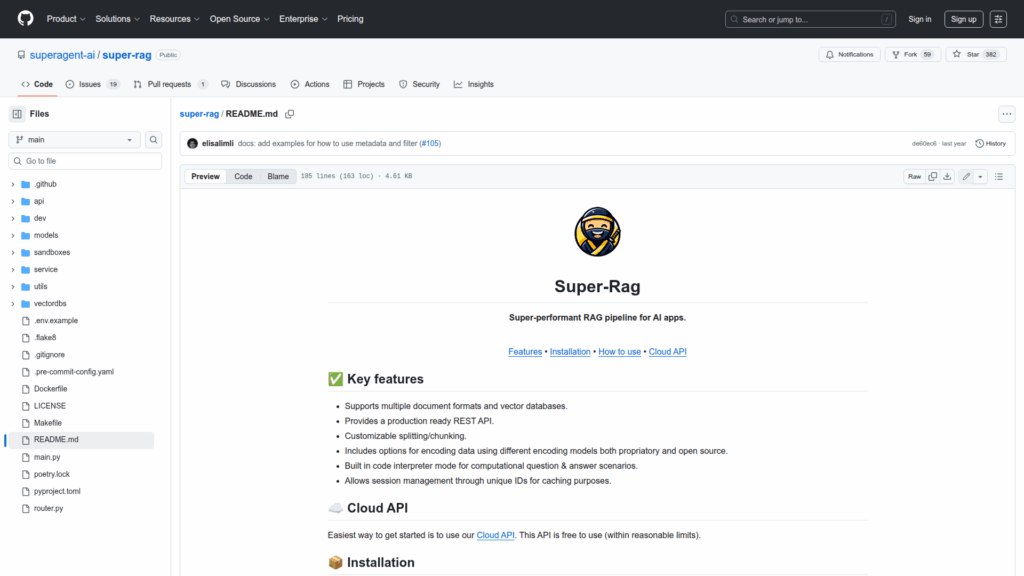

super-rag

Basic Information

Super-Rag is a developer-focused retrieval-augmented generation (RAG) pipeline for building AI applications that need scalable document ingestion, semantic search, and retrieval-backed question answering. The project provides a production-ready FastAPI REST API that accepts document ingestion requests, manages vector indexes, and serves query endpoints for retrieval and computation workflows. It supports multiple document formats, configurable splitting and chunking strategies, and pluggable encoders and vector databases. The code interpreter mode integrates with E2B.dev runtimes for computational Q&A. The README includes concrete API payload examples for ingest, query, and delete operations and instructions to run the service locally with a virtual environment and poetry. There is also an optional hosted Cloud API for quick starts. Overall the repo packages a full RAG pipeline with connectors and runtime options intended for developers deploying retrieval-backed AI features.