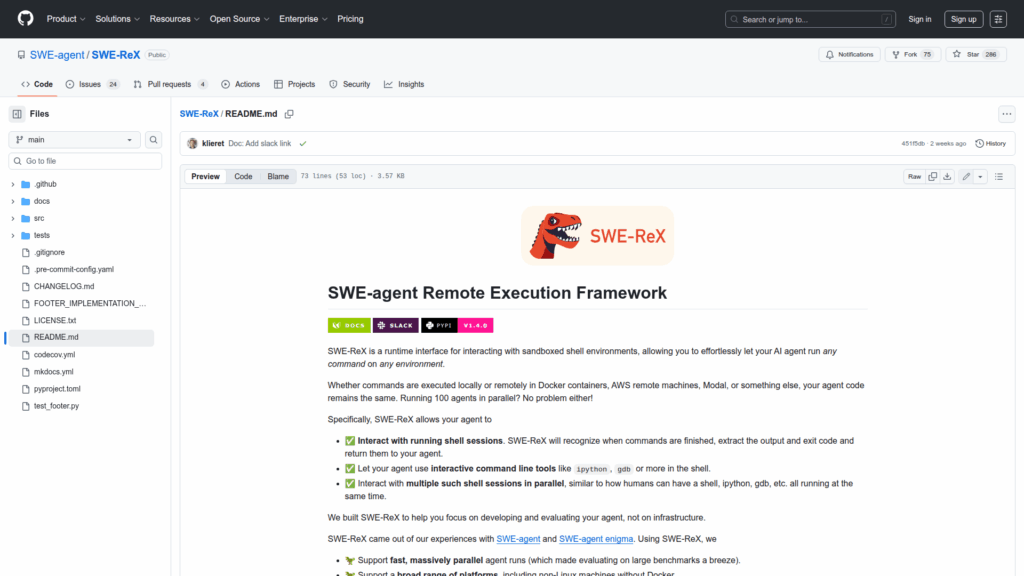

SWE-ReX

Basic Information

SWE-ReX is a runtime interface for developers building AI agents that need to execute shell commands in sandboxed environments. It provides a consistent API, so the same agent code can run commands locally or remotely without changes to its logic. It targets agents that must interact with real shells and command-line tools, including interactive programs, and workloads that must scale to many parallel sessions.

SWE-ReX grew out of the SWE-agent project. It takes infrastructure concerns off the agent developer's plate and makes it easy to run agents on Docker containers, cloud machines, Modal, and other execution backends. Its focus is reproducible, massively parallel agent evaluation and execution, with the agent implementation kept independent of the underlying environment. The project is packaged with optional extras for the individual backends, and its documentation covers detailed setup and usage.
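The core idea above, agent code written once against a single runtime interface and then run on interchangeable backends across many parallel sessions, can be illustrated with a small sketch. This is a hypothetical illustration of the design pattern only, not SWE-ReX's actual API: the names `Runtime`, `LocalRuntime`, `FakeRuntime`, `CommandResult`, `agent_episode`, and `main` are invented here, and the real classes and setup steps are described in the project's documentation. A Docker, cloud, or Modal backend would, under this assumption, simply be another class implementing the same `run` coroutine.

```python
import asyncio
from dataclasses import dataclass
from typing import Protocol


@dataclass
class CommandResult:
    """Output of one shell command (hypothetical type, not SWE-ReX's)."""
    stdout: str
    exit_code: int


class Runtime(Protocol):
    """Hypothetical interface: every backend exposes the same coroutine."""

    async def run(self, command: str) -> CommandResult: ...


class LocalRuntime:
    """Backend that runs each command in a local shell subprocess."""

    async def run(self, command: str) -> CommandResult:
        proc = await asyncio.create_subprocess_shell(
            command,
            stdout=asyncio.subprocess.PIPE,
            stderr=asyncio.subprocess.STDOUT,  # merge stderr into stdout
        )
        out, _ = await proc.communicate()
        return CommandResult(stdout=out.decode(), exit_code=proc.returncode)


class FakeRuntime:
    """Backend stand-in that echoes the command without starting a process."""

    async def run(self, command: str) -> CommandResult:
        return CommandResult(stdout=f"[fake] {command}\n", exit_code=0)


async def agent_episode(runtime: Runtime, task_id: int) -> CommandResult:
    """Agent logic written only against the Runtime interface."""
    return await runtime.run(f"echo task-{task_id}")


async def main(runtime: Runtime, n: int) -> list[CommandResult]:
    """Run n agent episodes concurrently on the given backend."""
    return await asyncio.gather(*(agent_episode(runtime, i) for i in range(n)))


if __name__ == "__main__":
    # Hypothetical usage: swap LocalRuntime() for any other backend object.
    for result in asyncio.run(main(LocalRuntime(), 4)):
        print(result.exit_code, result.stdout.strip())
```

Because `agent_episode` depends only on the `run` method, switching backends means passing a different runtime object; for example, `asyncio.run(main(FakeRuntime(), 2))` exercises the same agent logic without starting any processes, while `asyncio.gather` stands in for running many sessions in parallel.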