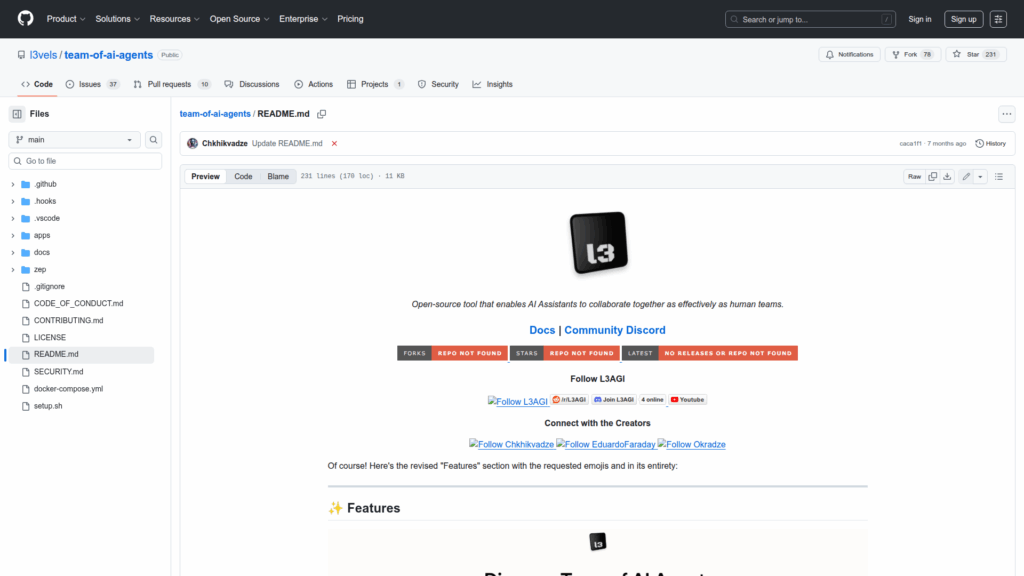

team-of-ai-agents

Basic Information

L3AGI (team-of-ai-agents) is an open-source platform for building, configuring, running and managing autonomous AI Assistants and coordinated Teams of Assistants. The repository provides a React UI and a Python FastAPI backend, packaged with Docker and Docker Compose to simplify local deployment.

It is intended for developers and teams who want to design collaborative agent workflows, give agents persistent memory, connect agents to external data sources and vector databases, and expose programmable APIs. The project emphasizes integrations and tooling, including support for LlamaIndex, LangChain, Zep, Postgres and common web services.

The README documents a quick start, environment configuration and architectural artifacts such as a database ERD. It also provides a roadmap, community links and contributor information for users who want to extend the platform or take multi-agent applications to production.